Data Quality

Data Quality is currently available on Snowflake, Databricks, and BigQuery Data Planes.

Overview

Data Quality checks are assertions about the dataset associated with a component. It measures objective elements such as completeness, accuracy, and consistency. Ascend provides built-in Standard and Custom Data Quality Checks using SQL queries. Once the data is ingested or transformed, the Quality Checks run before Ascend marks the component as UP TO DATE.

There are two types of Data Quality Checks in Ascend:

- A Standard Check uses a set of predefined operators to express the validation rule. The following operators are supported:

NOT NULL,>,>=,<,<=,IN,NOT IN, orUNIQUE. At runtime, Ascend constructs aSELECT COUNT(*)query with the configured operators and fails the check if the result count is greater than the configured threshold. - A Custom Check allows the user to write a custom SQL statement that returns records that do not pass the check. The check is failed if the number of records returned exceeds the configured failure threshold.

Data Quality Checks at the Component & Partition Level

Each Data Quality check can be configured to run either at the component level or at the partition level. Component-level checks run on the entire output dataset of the component. Partition-level checks run on individual partitions whenever they are added to the dataset. Partition-level checks are more efficient for incremental data pipelines by avoiding repeatedly running the same queries on the entire dataset.

For Standard Checks, the Ascend UI sets the Default Run On setting to By Partition by default. For a comprehensive overview of how partitioning with Ascend works, see Data Partitioning. For Custom Checks, the Ascend UI sets the Run On setting to By Component by default. These settings are also configurable through the API and SDK. The default is partition-level if the test level is not configured.

Failure Behavior and Threshold

Each Data Quality check can be configured to respond to a failure by Returning a Warning, Raising an Error, or Doing Nothing. When Return a Warning is selected, failures of the Data Quality check result in a warning getting logged but allow processing to continue. When Raise an Error is selected, failed checks result in processing for the component to stop. When configuring a Data Quality check in the Ascend UI, the failure behavior is set to Return a Warning by default.

The threshold at which a check is considered to have failed is also configurable. Currently, you can only access and set failure thresholds for warning and failure behaviors of checks through the Ascend SDK or API. In the Ascend UI, the threshold is automatically set to 0.

Example: For a Standard Check of NOT NULL configured with a threshold of 10 and failure behavior set to Return a Warning, Ascend will check how many rows have null values, raising a warning if more than 10 such rows are found.

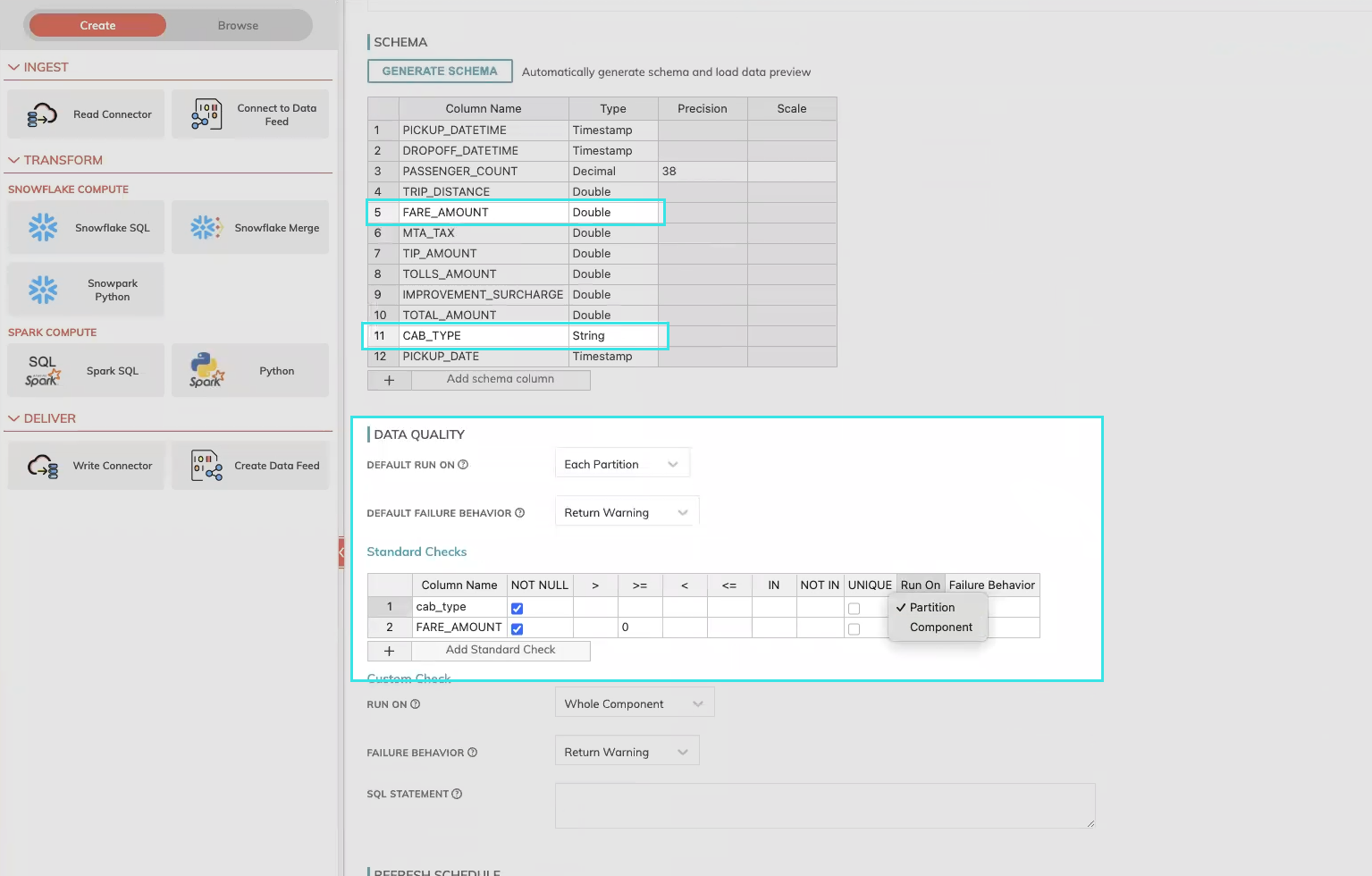

Configuring Standard Checks in the UI

Standard Checks allow you to set specific checks for each column with the option to allow for a default value of "run on" and "failure behavior." This allows greater control over prioritizing data integrity by column. When configuring a Read Connector or Transform, complete the following steps before clicking on CREATE.

Step 1: Set the Default Run On and Default Failure Behavior (Optional)

The Default Run On setting configures the default validation target (either Each Partition or the Whole Component) for all column checks. The Default Failure Behavior setting configures the default failure behavior (either Return Warning, Raise Error, or Do Nothing) for all column checks.

Step 2: Add a new Standard Check and specify a column

Select + Add Standard Check to add a new standard check row. Using the generated schema, identify the column to run the quality check on. Insert the column name into the Column Name field of the new row.

Step 3: Set the quality check parameters

Configure the desired failure conditions for the check. Available operators are: NOT NULL, >, >=, <, <=, IN, NOT IN, or UNIQUE.

The Run On and Failure Behavior columns allow you to override the default behavior set in Step 1.

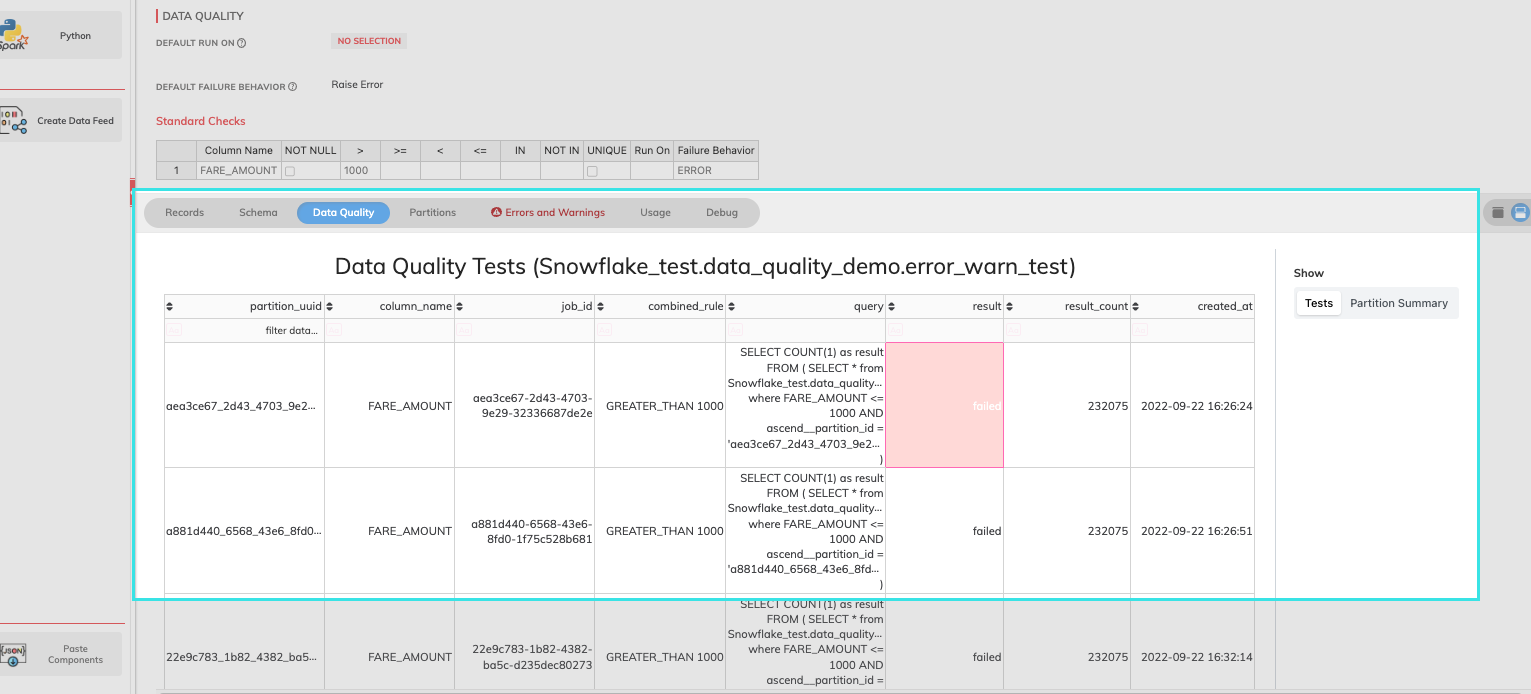

Figure 1. Creating Standard Checks.

Step 4: Add additional column checks

Repeat Steps 2 and 3 to add any additional column checks.

Once your Standard Check parameters are set, continue the configuration for your Read Connector or Transform.

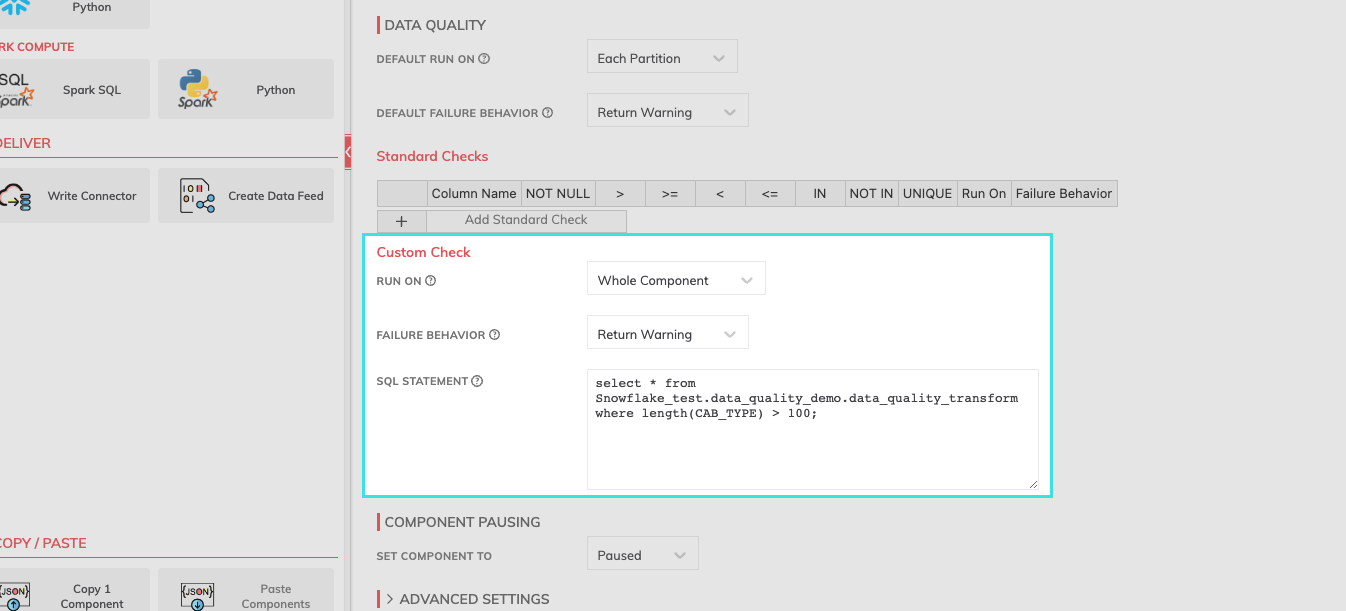

Configuring Custom Checks in the UI

Custom Checks provide the ability to create custom SQL queries to run against the data when the capabilities of Standard Checks are not sufficient to describe the failure conditions. When configuring a Read Connector or Transform Connector, complete the following steps before selecting CREATE.

Step 1: Set the Run On and Failure Behavior

The Run On setting configures the validation target (either Each Partition or Whole Component) for the Custom Check. The Failure Behavior setting configures the failure behavior (either Return Warning, Raise Error, or Do Nothing) for the custom check.

Step 2: Custom SQL

Create and insert your custom SQL query.

Figure 2. Creating Custom Checks.

Once your Custom Check query is set, continue the configuration for your Read or Transform Connector.

Reviewing Data Quality Check Errors and Warnings

The results of Data Quality checks in two ways in the Ascend UI: through visual indicators on the component graph, and in the Data Quality Tab of the Component Details pane. Additionally, Ascend stores results of the Data Quality Results in a table in the Data Plane alongside the output data tables.

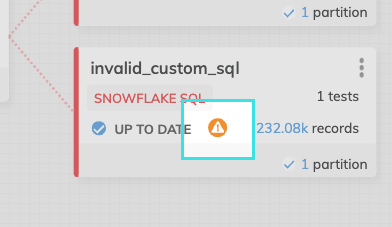

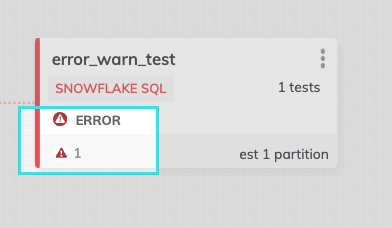

Error and Warning Icons in Component Graph

Warning and Error states can be quickly gleaned from a Component in the Component Graph.

Figure 3. A component with a failed check configured with the Return Warning failure behavior.

Figure 4. A component with a failed check configured with the Raise Error failure behavior.

Data Quality Tab in Component Details Pane

The Data Quality Tab in the Component Details Pane provides details for each check that was run against the data set.

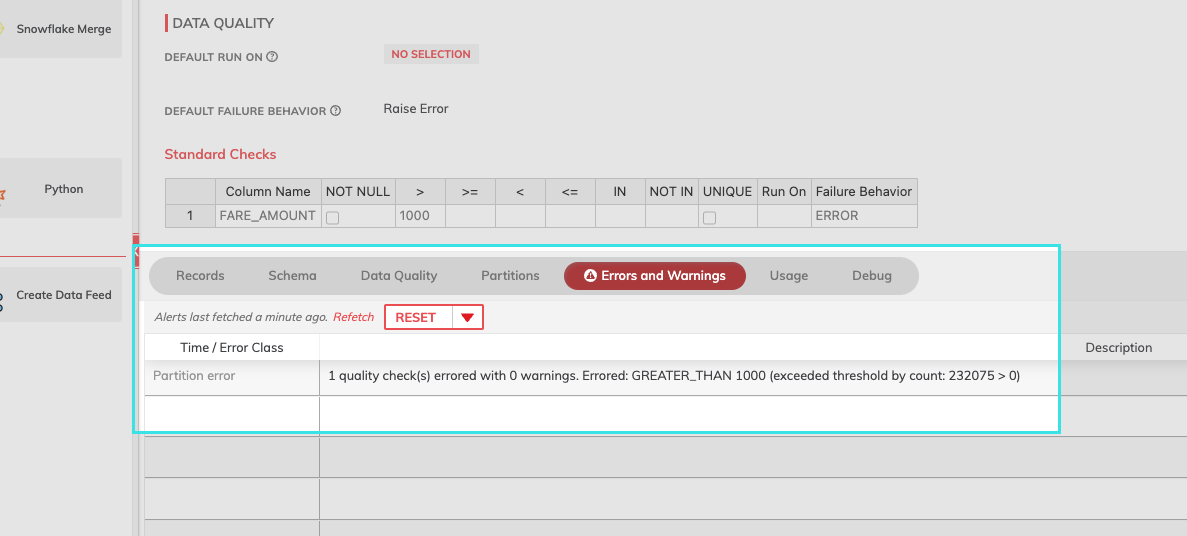

Figure 5. Standard Check set to Raise Error showing the partition error in the Data Quality tab.

When configured with Raise Error, a failed check also results in an error message displayed in the Errors and Warnings tab.

Figure 6. Standard Check set to Raise Error showing partition error on Errors and Warnings tab.

Data Quality Checks Table

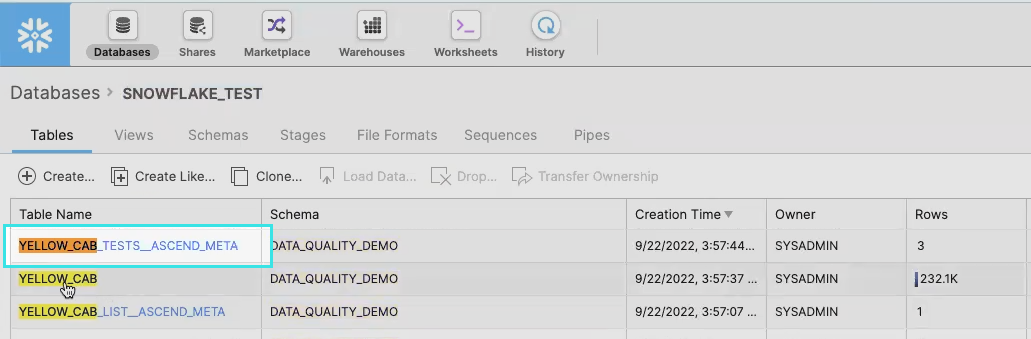

For any Data Plane, both Standard Checks and Custom Checks will store data quality test queries and results in a new table. The table will appear in the same database/schema as the original data table with the name <TABLENAME>_TESTS__ASCEND_META.

In this example, data is being ingested into a Snowflake Data Plane by a component named YELLOW_CAB. The table name containing the quality test results is YELLOW_CAB_TESTS__ASCEND_META.

Figure 7. Ascend data quality checks table in Snowflake.

Data Quality Checks through Ascend SDK and API

Data Quality Checks can be configured through the SDK and API. These are also the only way in which non-default failure thresholds for checks can be configured (in the Ascend UI, the threshold is always set to 0).

Data Quality Check Results in Transforms

You can retrieve the results for Data Quality Checks that were run on a component by using a special syntax in a SQL Transform, in a SQL query. The historical data provided can be used in the dataflow and downstream, for example, to push to external systems for analytics and/or reporting purposes.

For example, imagine a Read Connector named my_component. You can return the Data Quality Check data (visible on the Data Quality tab in the component UI) directly in a SQL Transform and then use that result just like any other component in a query.

To get the Data Quality Check Test Results, just refer to {{my_component.test}} as the component name in the SQL query (where my_component refers to the name of your component which has tests enabled, and has test results already generated). The Data Quality Check Test Results will be returned as the result of the SQL Transform.

The SQL Transform returns the Data Quality Check Test Results from an upstream component, not for the SQL Transform itself. For example, use it to return test results from an upstream Read Connector or Transform.

See the example below:

Figure 8. Data Quality Check Results returned in Transform.

Questions?

We're always happy to help with any other questions you might have! Send us an email, ping us on Slack, or chat with us to your right ![]() .

.

Updated about 2 months ago