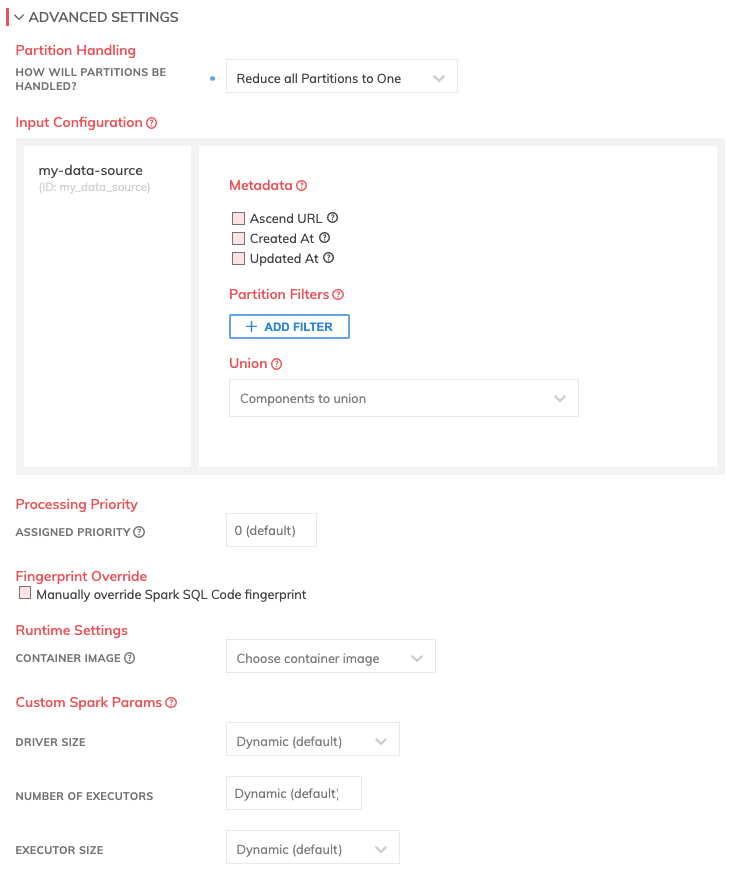

Advanced Settings (Transform)

Configuring Advanced Settings

All transforms share a common set of advanced settings. These settings enable advanced scenarios that give users more control over how their data is processed in the transform.

Partition Handling

Ascend Partitions and partitioning strategies for transforms are described in detail in this related documentation.

Input Configuration

The input configuration section of Advanced Settings allows you to apply operations to partitions before running transformation logic. Each input to the transform is identified by its component identifier in Ascend, and each input configuration block applies only to that input.

- Metadata: adds the specified internal Ascend metadata columns/attributes to the partition so these columns can be used within transformation logic. Read the Transform metadata documentation for more details on the metadata columns that can be added.

- Partition Filters: allows the user to specify filter logic that selects the partitions the transform logic will be applied to. Partitions can be filtered by a static value or by another value within the same partition.

- Union All: unions all the specified partitions with the specified input partition. This is a partition-level union, which differs from a record-level SQL UNION ALL, although the result looks similar from the user's perspective. Records appear as if they were combined using SQL UNION ALL, but the partitions remain independent, atomic partitions that together represent the complete data for the given component. Users can effectively think of this as "gluing all the partitions together" into a single component. As with UNION ALL, duplicates are preserved and are not removed.
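The difference between a partition-level union and a record-level union can be sketched in plain Python. This is only an illustration of the idea described above, not Ascend's implementation: the `Partition` class, the function names, and the sample data are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Partition:
    """Hypothetical stand-in for a partition: an identifier plus its records."""
    partition_id: str
    records: tuple


def partition_union_all(*inputs: List[Partition]) -> List[Partition]:
    """Combine inputs at the partition level: each partition is kept intact."""
    combined: List[Partition] = []
    for partitions in inputs:
        combined.extend(partitions)
    return combined


def read_records(partitions: List[Partition]) -> list:
    """What a reader sees: every record from every partition, duplicates kept."""
    return [record for p in partitions for record in p.records]


# Two hypothetical inputs that share one identical record, ("b", 2).
orders_2023 = [Partition("orders/2023", (("a", 1), ("b", 2)))]
orders_2024 = [Partition("orders/2024", (("b", 2), ("c", 3)))]

unioned = partition_union_all(orders_2023, orders_2024)
```

Here `unioned` still holds two separate partitions, while `read_records(unioned)` returns all four records, including the duplicate, much as a SQL UNION ALL would.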

Processing Priority

- Assigned Priority: When resources are constrained, Processing Priority will be used to determine which components to schedule first. Higher priority numbers are scheduled before lower ones. Increasing the priority on a component also causes all its upstream components to be prioritized higher. Negative priorities can be used to postpone work until excess capacity becomes available.
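As a rough mental model of the priority rules above, the sketch below propagates each component's priority to its upstream components and then orders components from highest to lowest priority. It is a simplified illustration, not Ascend's scheduler: the function names, the component names, and the default priority of 0 for unassigned components are assumptions.

```python
from typing import Dict, List, Set


def ancestors(component: str, upstream: Dict[str, List[str]]) -> Set[str]:
    """Return every component that is directly or indirectly upstream."""
    seen: Set[str] = set()
    stack = list(upstream.get(component, []))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(upstream.get(node, []))
    return seen


def effective_priorities(
    assigned: Dict[str, int],
    upstream: Dict[str, List[str]],
    default: int = 0,  # assumed default for components with no assigned priority
) -> Dict[str, int]:
    """Raise each upstream component to at least its downstream's priority."""
    components = set(assigned) | set(upstream)
    for parents in upstream.values():
        components.update(parents)
    effective = {c: assigned.get(c, default) for c in components}
    for c in components:
        own = assigned.get(c, default)
        for a in ancestors(c, upstream):
            effective[a] = max(effective[a], own)
    return effective


def schedule_order(effective: Dict[str, int]) -> List[str]:
    """Higher priority first; ties are broken by name for determinism."""
    return sorted(effective, key=lambda c: (-effective[c], c))


# Hypothetical dataflow: raw -> clean -> report, and raw -> archive.
upstream = {"report": ["clean"], "clean": ["raw"], "archive": ["raw"]}
assigned = {"report": 10, "archive": -5}

effective = effective_priorities(assigned, upstream)
order = schedule_order(effective)
```

In this example `clean` and `raw` inherit priority 10 from `report`, while `archive` keeps its negative priority and is ordered last. The sketch ranks by priority only and does not model dependency ordering or resource availability.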

Fingerprint Override

This setting overrides the code fingerprint (the SHA hash of the code) of the current transform. In normal operation, Ascend automatically calculates a SHA hash of the transform's code content. The Ascend platform uses this hash to detect changes to the code and to propagate those changes downstream from the component. If you do not want the component to refresh/recalculate when its code changes, you can assign a static Fingerprint Override Code.

Warning: For Experienced Users Only. The fingerprint override can have undesirable side effects and should be used by experienced users only. Most users will never need this setting and should avoid it.

If you wish to do this, check the "Manually override fingerprint" checkbox. Then configure the static fingerprint value as needed based on the description below.

- Overridden Code Fingerprint: When this is set, Ascend will treat this static value as the unique code SHA hash to represent the component's code, instead of calculating a hash based on the transform code itself. A common practice is to use a globally unique identifier (e.g. a v4 UUID/GUID) as the static value. This value must be globally unique within Ascend, or unexpected and/or undesirable side effects may occur. If the static override fingerprint is manually changed, then the component will be considered to have changed and be refreshed/recalculated (and changes propagated downstream).
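The effect of a static override on change detection can be illustrated with a small sketch. This is not Ascend's code: the `code_fingerprint` function is hypothetical, and SHA-256 is an assumption, since the text above says only that a SHA hash is used.

```python
import hashlib
import uuid
from typing import Optional


def code_fingerprint(code: str, override: Optional[str] = None) -> str:
    """Return the static override if one is set, else a hash of the code."""
    if override is not None:
        return override
    # Assumption: SHA-256. The specific SHA variant is not documented here.
    return hashlib.sha256(code.encode("utf-8")).hexdigest()


code_v1 = "SELECT * FROM orders"
code_v2 = "SELECT id, total FROM orders"

# Without an override, editing the code changes the fingerprint.
changed_without_override = code_fingerprint(code_v1) != code_fingerprint(code_v2)

# With a static override (here a v4 UUID), the fingerprint ignores code edits.
static_value = str(uuid.uuid4())
changed_with_override = (
    code_fingerprint(code_v1, static_value) != code_fingerprint(code_v2, static_value)
)

# Changing the override value itself counts as a change.
new_static_value = str(uuid.uuid4())
changed_by_new_override = (
    code_fingerprint(code_v2, static_value) != code_fingerprint(code_v2, new_static_value)
)
```

In this model, a code edit is detected only when no override is set, and swapping the override value is what signals a change once the override is in place.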

Runtime Settings

- Container Image: This option allows you to choose which Docker image will be used to run the code in the transform. The default (if no other value is specified) is "Use Data Service image".

  - Use Data Service image: By default, the container image to use is controlled by each data service. Review the Data Service container image documentation for more detail on how to configure the container image at the Site and/or Data Service level.

  - Native Ascend Spark: These are native Spark images developed and supported by Ascend and contain the standard pip packages described here. When you select this option, you are given an additional option to choose the Spark runtime to use. You may do this, for example, to maintain compatibility with an older version of Spark, if required.

  - Image label: If custom, labeled container images have been registered in the Admin > Cluster Management > Custom Docker Images settings (see docs here), they will be available here to choose.

  - Container image URL: This option lets you choose a container image by image name, as well as a corresponding runtime to use.

Custom Spark Params

Based on the size of the data and the amount of computation needed to be done, Ascend will, by default, dynamically determine the size of the Spark drivers, and the size and number of Spark executors needed. However, you can adjust these parameters as needed.

Adjusting the Spark Params from their default settings requires Spark expertise. Ascend normally adjusts these parameters automatically based on the data being processed. For guidance on recommended settings, please contact Ascend.

- Requires Credentials: If you need to pass any credentials to your transform code, check Requires Credentials. These credentials will then be passed through the credentials parameter in the transform function.

- Choose Credentials: You can either create new credentials or choose from existing credentials.

- Driver Size: Determines the cloud instance size of your Spark drivers, with options such as Small, Medium, Large, or X-Large.

- Number of Executors: Select the number of Spark executors per Spark driver.

- Executor Size: Determines the cloud instance size of each Spark executor, with options such as Small, Medium, Large, or X-Large.
