Data Partitioning

Optimizing for efficiency and performance in your dataflows

When managing data with Ascend, partitioning your data effectively is crucial for incremental processing and robust scaling. Let's dive into how you can apply this in your projects.

Understanding Partitions in Ascend

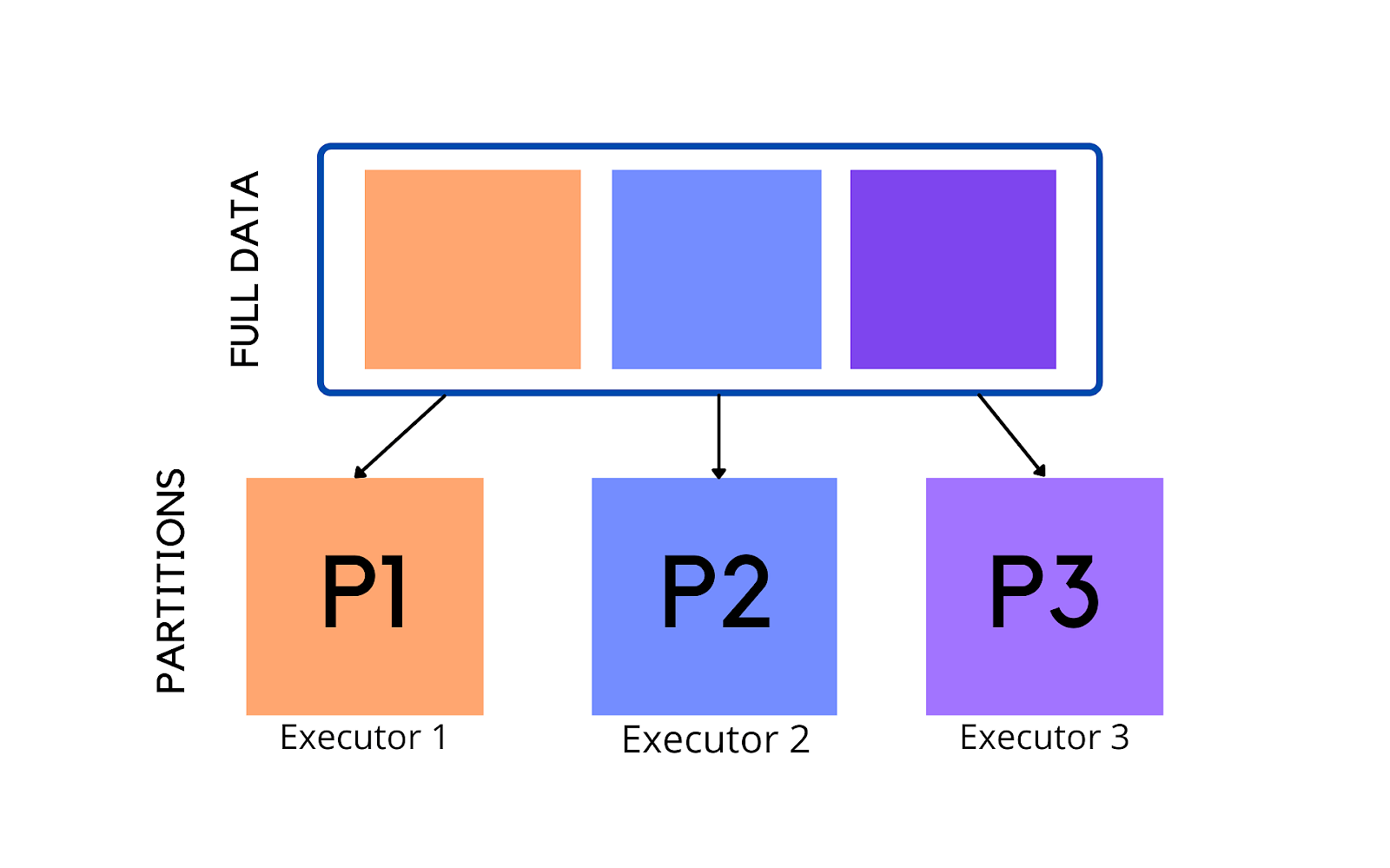

A partition in Ascend is essentially a collection of records. When you work with any Ascend component, it automatically partitions its data set. This helps in two ways:

- Incremental Data Management: Ascend processes only those partitions that are new, updated, or deleted.

- Robust Scaling: Each partition is processed as a distinct Spark task, enhancing performance.

Let's go old school for a second. Think of partitioning like publishing an encyclopedia set (the hard copy ones you still find in a library for some reason). If there's a need to update information on a specific topic, only the relevant volume (partition) is revised. This is similar to how Ascend selectively processes only the new, updated, or deleted partitions, thereby enhancing efficiency and managing resources better.

Designing Your Partition Strategy

When planning your data flow, consider the following to select the right partitioning strategy:

Number of Total Tasks

The number of tasks a Transform generates depends on its partitioning strategy. For instance, if you have an upstream Read Connector with 500 partitions (5 million records over 50 days), here's how different strategies pan out:

- Full Reduction: This strategy creates just one task to process all 5 million records.

- Timestamp Partitioning: If you use daily partitions, you'll end up with 50 tasks, each handling about 100,000 records.

- Mapping: This approach generates 500 tasks, with each handling around 10,000 records.
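The arithmetic behind these three figures can be sketched in a few lines of Python. This is only an illustration of the example above, not Ascend code: the function name and strategy labels are made up for this sketch, and it assumes records are spread evenly across partitions and days.

```python
# Figures from the example above. Names are hypothetical labels, not Ascend APIs.
TOTAL_RECORDS = 5_000_000
UPSTREAM_PARTITIONS = 500
DAYS = 50


def task_plan(total_records: int, upstream_partitions: int, days: int) -> dict:
    """Return {strategy: (task_count, records_per_task)}.

    Assumes records are evenly distributed, which real data rarely is.
    """
    task_counts = {
        "full_reduction": 1,             # one task sees every record
        "timestamp_daily": days,         # one task per daily partition
        "mapping": upstream_partitions,  # one task per upstream partition
    }
    return {
        name: (tasks, total_records // tasks)
        for name, tasks in task_counts.items()
    }


plan = task_plan(TOTAL_RECORDS, UPSTREAM_PARTITIONS, DAYS)
for strategy, (tasks, per_task) in plan.items():
    print(f"{strategy}: {tasks} task(s), ~{per_task:,} records each")
```

Changing `DAYS` or `UPSTREAM_PARTITIONS` shows how quickly the task count grows for Timestamp Partitioning and Mapping while a Full Reduction stays at one task.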

For a single full run, a Full Reduction is often more efficient than Mapping or Timestamp Partitioning for downstream Transforms, as processing many small tasks can be resource-intensive and time-consuming.

The trade-off appears when data changes. A Full Reduction approach is like rewriting the entire encyclopedia set for every minor update, while Timestamp Partitioning and Mapping are like updating only the specific volumes where changes occurred. For incremental updates, the latter approaches are more efficient, since they avoid the unnecessary work of revising unaltered volumes.

Incremental Data Loading

Ascend's Dataflows are designed to run continuously. When new data arrives at the Read Connector, Ascend automatically triggers an incremental data load and updates all components in the Dataflow. In this context:

- Full Reduction Transform: Processes all historical data with each new update.

- Mapping and Timestamp Partitioning Transforms: Do not require reprocessing the entire historical dataset with each new increment.

For example, suppose a new file arrives, bringing the totals to 501 partitions and 5,010,000 records:

- Full Reduction will process all 5,010,000 records.

- Mapping and Timestamp Partitioning will process only the new 10,000 records.
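To make the difference concrete, here is a minimal Python sketch of how many records each strategy touches after the new file arrives. The function and strategy labels are hypothetical and exist only for this illustration; the numbers come from the example above.

```python
def records_processed(strategy: str, total_records: int, new_records: int) -> int:
    """Records a Transform must process after one incremental load.

    Strategy names are illustrative labels, not Ascend identifiers.
    """
    if strategy == "full_reduction":
        # Every update reprocesses the full history.
        return total_records
    if strategy in ("mapping", "timestamp"):
        # Only the newly arrived partition is processed,
        # as stated in the example above.
        return new_records
    raise ValueError(f"unknown strategy: {strategy}")


TOTAL_AFTER_UPDATE = 5_010_000
NEW_RECORDS = 10_000

full = records_processed("full_reduction", TOTAL_AFTER_UPDATE, NEW_RECORDS)
incremental = records_processed("mapping", TOTAL_AFTER_UPDATE, NEW_RECORDS)

print(f"Full Reduction processes {full:,} records")
print(f"Mapping / Timestamp Partitioning process {incremental:,} records")
print(f"Ratio: {full // incremental}x")
```

In this example the Full Reduction handles 501 times as many records as the incremental strategies for the same update, and that gap widens with every new file.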

Using Mapping and Timestamp Partitioning can be more efficient, but use Full Reductions if required by your business logic.

Let's say you want to add a new volume called α-δ to an encyclopedia set. With Full Reduction, you rewrite the entire set, including the new volume. In contrast, Mapping and Timestamp Partitioning only require adding the new volume, leaving the rest of the set unchanged. This approach is more practical, less time-consuming, and less costly.

Ascend Partitions vs. Spark Partitions

It's important to differentiate between Ascend and Spark partitions:

- Ascend Partition: Focuses on incrementality, tracking changes and new data. For example, a new S3 LIST operation might determine that 2 new partitions (representing 2 files) have shown up, and 1 previous partition (1 file) has changed content.

- Spark Partition: Deals with parallelism and physical data storage during processing, aiming to minimize shuffling and I/O operations.
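The incremental side of this distinction can be sketched as a comparison between two file listings. The snippet below is a conceptual illustration only, not how Ascend is implemented: the function name, file paths, and fingerprint strings are all hypothetical, and it assumes each file maps to one partition identified by a content fingerprint.

```python
from typing import Dict, List


def diff_partitions(
    previous: Dict[str, str], current: Dict[str, str]
) -> Dict[str, List[str]]:
    """Classify partitions by comparing {path: fingerprint} listings."""
    new = sorted(path for path in current if path not in previous)
    updated = sorted(
        path
        for path in current
        if path in previous and current[path] != previous[path]
    )
    deleted = sorted(path for path in previous if path not in current)
    return {"new": new, "updated": updated, "deleted": deleted}


# Hypothetical listings: two files appear and one changes content.
previous_listing = {"data/a.csv": "fp-1", "data/b.csv": "fp-2"}
current_listing = {
    "data/a.csv": "fp-1",   # unchanged, so it is skipped
    "data/b.csv": "fp-2b",  # content changed
    "data/c.csv": "fp-3",   # new file
    "data/d.csv": "fp-4",   # new file
}

changes = diff_partitions(previous_listing, current_listing)
print(changes)
```

Only the partitions listed under `new`, `updated`, or `deleted` would need work; the unchanged `data/a.csv` is left alone.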

Partitioning in Spark

Using .repartition(n) or .coalesce(n) in Ascend

While Spark's repartition and coalesce methods change the number of partitions in an RDD or DataFrame, they do not affect Ascend partitions.

For example, if a Read Connector with 500 Ascend partitions is followed by a PySpark Transform that maps one-to-one with Ascend partitions, calling .repartition(10) will redistribute each partition's records across 10 Spark partitions for processing. However, it's usually best to avoid explicitly calling .repartition(n) or .coalesce(n) and let Spark determine the appropriate partition count.
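The following plain-Python simulation illustrates the idea without requiring a Spark session. It is not Spark or Ascend code: `spread_round_robin` is a made-up stand-in for the roughly even redistribution that repartitioning performs, and the three small "Ascend partitions" are hypothetical sample data.

```python
from typing import Dict, List


def spread_round_robin(records: List[int], n: int) -> List[List[int]]:
    """Stand-in for repartitioning: spread records evenly over n buckets."""
    buckets: List[List[int]] = [[] for _ in range(n)]
    for index, record in enumerate(records):
        buckets[index % n].append(record)
    return buckets


# Three hypothetical Ascend partitions with 10,000 records each.
ascend_partitions: Dict[str, List[int]] = {
    f"file-{i}": list(range(10_000)) for i in range(3)
}

# A one-to-one Transform handles each Ascend partition on its own and
# spreads that partition's records across 10 simulated Spark partitions.
spark_layout = {
    name: spread_round_robin(records, 10)
    for name, records in ascend_partitions.items()
}

for name, buckets in spark_layout.items():
    sizes = [len(bucket) for bucket in buckets]
    print(f"{name}: {len(buckets)} Spark partitions, sizes {sizes}")

# The number of Ascend partitions is unchanged by the redistribution.
print(f"Ascend partitions before: {len(ascend_partitions)}, after: {len(spark_layout)}")
```

The redistribution changes how each partition's records are laid out for processing, while the set of Ascend partitions tracked for incrementality stays the same.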

Going back to our encyclopedia set: we've decided we want to rearrange how the volumes are ordered. Ascend's partitioning decides which topics go into which volume (for easy updating), while Spark's partitioning organizes these volumes in the library for efficient access and space utilization (how they fit on a shelf). Using .repartition(n) or .coalesce(n) is like redistributing the topics among a new set of volumes, which can be an unnecessary hassle if not done for a specific purpose.

Ascend, BigQuery, and Snowflake Partitioning

Many data management and pipeline platforms handle partitioning differently. With Ascend, partitioning is unique in that it’s highly incremental and dynamic. Only the partitions with new, updated, or deleted data are processed, which keeps data processing efficient and targeted. This method is particularly effective for workflows where the data pipeline must stay agile and responsive to changes.

Snowflake partitioning, known as micro-partitioning, manages data partitioning behind the scenes. It organizes data into small, immutable chunks (micro-partitions) that are optimized for storage and retrieval. This process is transparent to the user, focusing on optimizing query performance and storage efficiency at scale. Snowflake's approach offers less control and granularity compared to Ascend's partitioning, where specific partitioning strategies can be tailored to suit the unique needs of a dataset.

BigQuery primarily partitions data by time, such as daily or monthly intervals. This method is highly efficient for large-scale data analyses and queries, especially when dealing with time-series data, as it reduces the amount of data scanned during queries, thereby optimizing performance and cost. However, it's less flexible than Ascend's approach when it comes to handling diverse data types and incremental updates.

Let’s look at our encyclopedia set in the larger context of a library. With Ascend partitioning, you’re the editor telling the librarian how to arrange topics and which topics need updating. Only volumes with new or changed topics are revised, making the entire set agile and up-to-date in seconds rather than going through a whole publication process.

Snowflake partitioning leaves updating your encyclopedia to the librarians. They’re more interested in making sure the content of the topics fits within the predefined bounds of the book sizes and shelf capacity. The topics may be less related to one another, meaning when you, as the editor, need to make changes to several topics beginning with the letter “A”, you’ll have to update and reprint several volumes.

BigQuery partitioning organizes your encyclopedia topics by time, creating volumes for each day or month. This is great for quickly accessing information from specific periods, like how librarians group magazines and newspapers together. If you know the time period, you can easily make updates. However, if your topic spans multiple years or even skips a year, you again have to update every affected volume.
