Azure Blob Read Connector (Legacy)

Creating an Azure Blob Storage Read Connector

Prerequisites:

- Access credentials

- Data location on Azure Blob

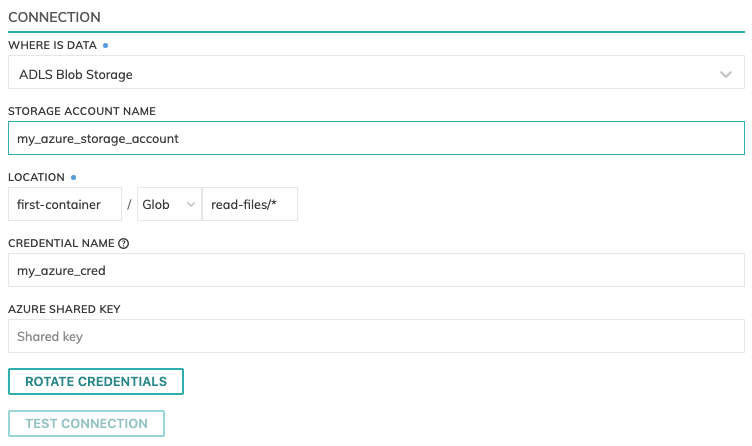

Connection Settings

Connection Settings specify the details needed to connect to a particular Azure Blob location.

Here's an example:

Storage Account Name

Enter the Storage Account Name for the subscription.

Location

The Azure Blob Read Connector has location settings made up of:

- Azure Blob Container: This is the container name within the Storage Account, such as first-container.

- Pattern: The folder path used to identify eligible files.
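As a rough illustration of how a pattern narrows a container down to eligible files, the sketch below filters a list of blob names with glob-style matching. The glob semantics, the `eligible_blobs` helper, and the sample blob names are assumptions made for this example; the connector's actual matching rules are not described on this page.

```python
from fnmatch import fnmatchcase


def eligible_blobs(blob_names, pattern):
    """Return the blob names that match a glob-style pattern.

    Hypothetical helper: glob matching is an assumption, not the
    connector's documented behavior.
    """
    return [name for name in blob_names if fnmatchcase(name, pattern)]


# Made-up blob names inside a container such as first-container.
blobs = [
    "events/2024/01/part-0.json",
    "events/2024/02/part-0.json",
    "logs/2024/01/app.log",
]

# In fnmatch, "*" also matches "/" so this selects everything under events/.
selected = eligible_blobs(blobs, "events/*")
print(selected)
```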

Azure Shared Key

This is the Access Key for the Storage Account. You can find it on the Storage Account page under Settings -> Access Keys.
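To show how the Storage Account Name, container, and shared key fit together outside the Ascend UI, here is a minimal sketch that assembles a standard Azure Storage connection string and container URL from them. The `BlobConnectionSettings` class and the placeholder values are hypothetical and are not part of Ascend; the sketch also assumes the public Azure cloud endpoint suffix `core.windows.net`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BlobConnectionSettings:
    """Hypothetical holder for the three connection settings (not an Ascend API)."""

    storage_account_name: str
    container: str
    shared_key: str

    def connection_string(self) -> str:
        # Shared-key connection string layout used by Azure Storage clients.
        # Assumes the public Azure cloud endpoint suffix.
        return (
            "DefaultEndpointsProtocol=https;"
            f"AccountName={self.storage_account_name};"
            f"AccountKey={self.shared_key};"
            "EndpointSuffix=core.windows.net"
        )

    def container_url(self) -> str:
        # URL of the container within the storage account.
        return (
            f"https://{self.storage_account_name}.blob.core.windows.net/"
            f"{self.container}"
        )


settings = BlobConnectionSettings(
    storage_account_name="examplestorageacct",  # placeholder account name
    container="first-container",
    shared_key="<access-key>",  # placeholder; never commit a real key
)
print(settings.container_url())
```

Keeping the key in a secret store or environment variable rather than in source code is the safer choice for anything beyond a local experiment.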

Testing Connection

Use Test Connection to check whether all Azure Blob permissions are correctly configured.

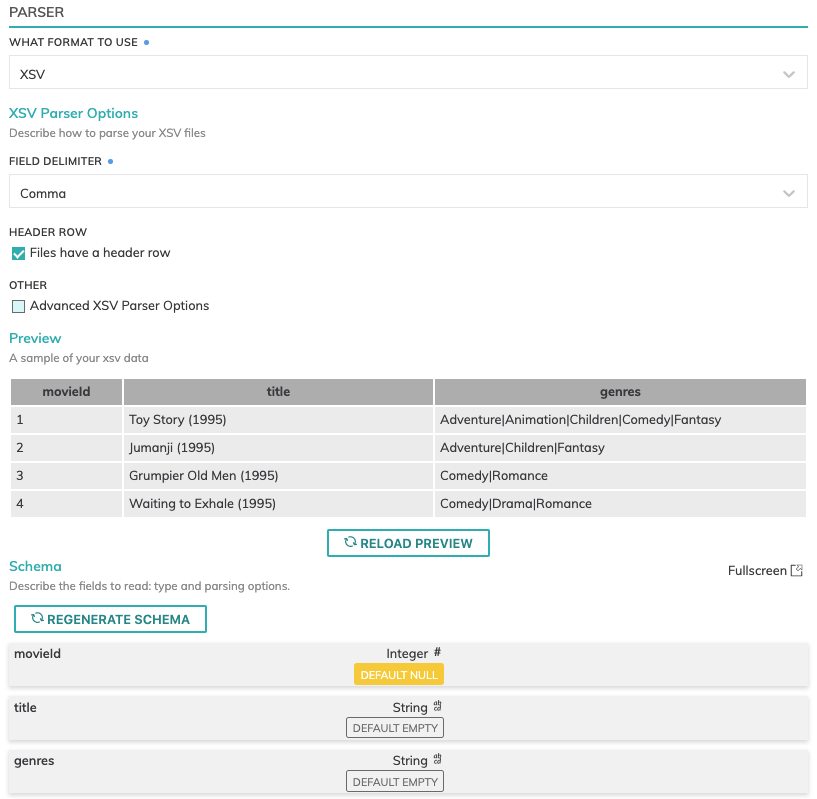

Parser Setting

The data formats currently available are Avro, Grok, JSON, Parquet, and XSV. To process other file formats, you can create your own parser functions or define a UDP (User Defined Parser).

Schema information is fetched automatically for JSON and Parquet files, and for XSV files that have a header row.

Here's an example:

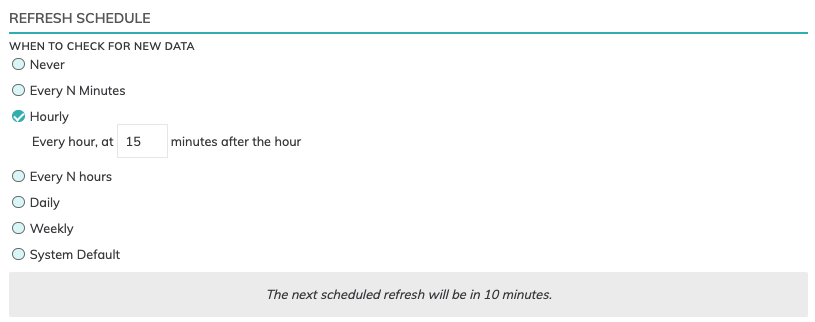
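To make the role of the header row concrete, the following sketch derives column names from the first line of a delimited file and guesses each column's type from the first data row. It is only an illustration under simple assumptions: the `infer_xsv_schema` helper, the three type names, and the sample data are invented for this example and do not describe how Ascend infers schemas.

```python
import csv
import io


def _guess_type(value):
    """Guess a coarse type name for one cell (hypothetical type names)."""
    for caster, name in ((int, "int"), (float, "float")):
        try:
            caster(value)
            return name
        except ValueError:
            continue
    return "string"


def infer_xsv_schema(text, delimiter=","):
    """Return (column, type) pairs using the header row and first data row."""
    rows = csv.reader(io.StringIO(text), delimiter=delimiter)
    header = next(rows)
    first_row = next(rows, None)
    if first_row is None:
        # Header only: no data to inspect, so fall back to strings.
        return [(column, "string") for column in header]
    return [(column, _guess_type(cell)) for column, cell in zip(header, first_row)]


# Made-up pipe-delimited sample with a header row.
sample = "id|name|score\n1|alice|9.5\n2|bob|7.0\n"
schema = infer_xsv_schema(sample, delimiter="|")
print(schema)
```

Without a header row there are no column names to read, which is why a file lacking one needs its schema supplied by hand.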

Refresh Schedule

The refresh schedule specifies how often Ascend checks the data location to see if there's new data. Ascend will automatically kick off the corresponding big data jobs once new or updated data is discovered.

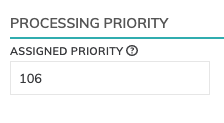
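The idea of discovering new or updated data on each refresh can be sketched as a comparison between two listings of the data location. This is a simplified model, not Ascend's implementation: the `changed_blobs` helper is hypothetical, and it assumes each listing maps a blob name to some change fingerprint such as an ETag or last-modified value.

```python
def changed_blobs(previous, current):
    """Return names that are new, or whose fingerprint differs from the last check.

    Both arguments map blob name -> fingerprint (for example an ETag).
    Hypothetical helper for illustration only.
    """
    return sorted(
        name
        for name, fingerprint in current.items()
        if previous.get(name) != fingerprint
    )


# Made-up listings from two consecutive refreshes.
last_check = {"events/a.json": "etag-1", "events/b.json": "etag-7"}
this_check = {
    "events/a.json": "etag-1",  # unchanged
    "events/b.json": "etag-8",  # updated since the last check
    "events/c.json": "etag-1",  # newly arrived
}

to_process = changed_blobs(last_check, this_check)
print(to_process)
```

Only the names returned here would need downstream processing; unchanged files can be skipped.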

Processing Priority (optional)

When resources are constrained, Processing Priority will be used to determine which components to schedule first.

Higher priority numbers are scheduled before lower ones. Increasing the priority on a component also causes all its upstream components to be prioritized higher. Negative priorities can be used to postpone work until excess capacity becomes available.
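One way to read these rules is that a component's effective priority is the highest priority found on itself or on anything downstream of it. The sketch below models that interpretation on a small made-up pipeline; the `effective_priorities` function, the component names, and the default priority of 0 are assumptions for illustration, not Ascend's scheduler.

```python
def effective_priorities(priorities, upstream_of):
    """Propagate each component's priority to all of its upstream components.

    priorities:  component -> its own priority (every component listed)
    upstream_of: component -> list of its direct upstream components
    Hypothetical model of the rule described above.
    """
    effective = dict(priorities)

    def raise_upstream(component, priority):
        for parent in upstream_of.get(component, []):
            if effective.get(parent, 0) < priority:
                effective[parent] = priority
                raise_upstream(parent, priority)

    for component, priority in priorities.items():
        raise_upstream(component, priority)
    return effective


# Made-up pipeline: read_blob feeds clean and archive; clean feeds report.
priorities = {"read_blob": 0, "clean": 0, "report": 5, "archive": -1}
upstream_of = {
    "clean": ["read_blob"],
    "report": ["clean"],
    "archive": ["read_blob"],
}

effective = effective_priorities(priorities, upstream_of)
# Higher numbers are scheduled first; negative priorities wait until last.
order = sorted(effective, key=lambda name: effective[name], reverse=True)
print(effective)
print(order)
```

In this example, raising `report` to 5 pulls `clean` and `read_blob` up with it, while `archive` keeps its negative priority and is scheduled only after the others.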

