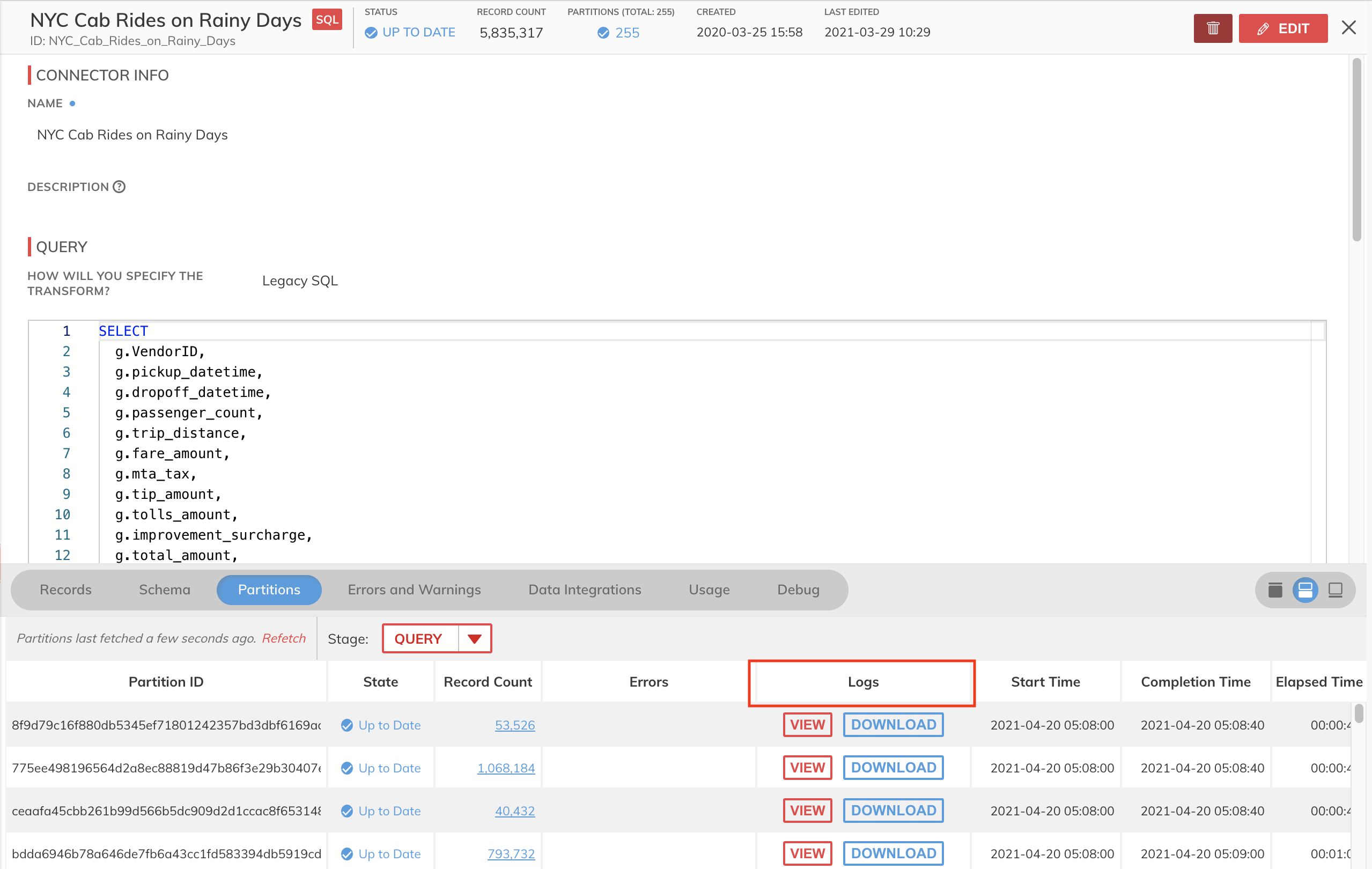

Component Logs

Ascend components retain the Spark logs for each partition of data processed. The UI surfaces these logs under the "Partitions" tab, where developers can view them in the browser or download the files. Developers can also fetch or download logs programmatically through the Ascend SDK.

Logs Availability

Ascend manages Spark logs for Read Connections and Spark transformations (SQL, PySpark). Write Connectors and Read Connectors (Legacy) do not currently support logging.

Ascend also surfaces logs of in-progress Spark jobs, so you can view the logs while a job is running and refresh to see the most up-to-date output.

Accessing Logs from the UI

- Locate a component on the Dataflow that you wish to view logs for.

- Open the component detail view.

- Navigate to the "Partitions" tab.

- Under the "Logs" column, click on either View to open the logs in a new browser tab or Download to download a folder of the log files.

Instrumenting Logs in PySpark

Developers can instrument their own logging in a PySpark transform and have the log statements appear in the partition logs. This logging must go through the Ascend logging interface in order to add the correct logging labels and route to the correct partition.

A small example:

from pyspark.sql import DataFrame, SparkSession
from typing import List

import ascend.log as log

def transform(spark_session: SparkSession, inputs: List[DataFrame], credentials=None) -> DataFrame:
    df = inputs[0]
    # Log through the Ascend logger so the message is labeled and routed to the partition logs.
    log.info("I am logging!")
    return df

The log module provides functions compatible with the Python glog package. For the full list of functions in the ascend.log module, see the Ascend Log Module Reference section below.

The logger keeps the required labels in thread-local variables. By default, logs from the Spark driver are collected, but logs from the executors are not. It is possible to propagate the labels to executors by using get_log_label and set_log_label and threading the label through to the executor code. However, for executor-side diagnostics it may be better to append a result column to the output records, which keeps log volume constrained.
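To illustrate why a label held in thread-local storage has to be passed along explicitly, the sketch below reproduces the behaviour with Python's standard library only. It is an analogy, not the ascend.log implementation: `set_label`, `get_label`, and `run_in_worker` are hypothetical stand-ins, and a worker thread plays the part of an executor. The real `get_log_label` and `set_log_label` signatures should be checked against the module itself.

```python
import threading

# Hypothetical stand-in for a logger that keeps its labels in thread-local storage.
_labels = threading.local()


def set_label(label):
    """Attach a label to the current thread only."""
    _labels.value = label


def get_label():
    """Return the current thread's label, or None if none was set."""
    return getattr(_labels, "value", None)


def run_in_worker(label=None):
    """Run a worker thread and report the label it sees.

    If a label is passed in, the worker sets it first; this is the
    "threading the label through" step.
    """
    seen = []

    def work():
        if label is not None:
            set_label(label)
        seen.append(get_label())

    worker = threading.Thread(target=work)
    worker.start()
    worker.join()
    return seen[0]


set_label("partition-42")  # set on the main ("driver") thread
without_propagation = run_in_worker()          # worker sees no label
with_propagation = run_in_worker(get_label())  # label passed explicitly
```

Without the explicit hand-off the worker has no label at all, which mirrors why executor logs are not collected by default.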

Ascend Log Module Reference

| Reference | Description | Example |
|---|---|---|
| debug | Function to log with level DEBUG | debug("debug statement") |
| info | Function to log with level INFO | info("info statement") |
| warning | Function to log with level WARNING | warning("warning statement") |
| warn | Function to log with level WARNING | warn("warning statement") |
| error | Function to log with level ERROR | error("error statement") |
| exception | Function to log with level EXCEPTION | exception("exception statement") |
| fatal | Function to log with level FATAL | fatal("fatal statement") |
| log | Function to log that takes the logging level as its first argument and the log message as its second. Log levels are found in the native Python logging module. | log(logging.INFO, "info statement") |
| setLevel | Function to set the severity threshold of messages to emit. Logs with a severity at or above this threshold will be emitted. Log levels are found in the native Python logging module. | setLevel(logging.INFO) |
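Since the log levels come from Python's logging module, the effect of a severity threshold can be tried out with the standard library alone. The sketch below uses a plain `logging.Logger` rather than ascend.log (an assumption made so that it runs anywhere); the logger name `threshold_demo` and the `ListHandler` class are illustrative.

```python
import logging


class ListHandler(logging.Handler):
    """Collects emitted messages in a list so they can be inspected."""

    def __init__(self):
        super().__init__()
        self.messages = []

    def emit(self, record):
        self.messages.append(f"{record.levelname}: {record.getMessage()}")


logger = logging.getLogger("threshold_demo")
logger.propagate = False  # keep the demo output out of the root logger
handler = ListHandler()
logger.addHandler(handler)

logger.setLevel(logging.INFO)  # threshold: INFO and above are emitted
logger.debug("debug statement")      # below the threshold, dropped
logger.info("info statement")        # at the threshold, emitted
logger.warning("warning statement")  # above the threshold, emitted
logger.log(logging.ERROR, "error statement")  # level passed explicitly

emitted = handler.messages
```

In the standard library, a message logged at exactly the threshold level is emitted, not only messages above it.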

Logs in Blob Storage

Ascend also writes logs to the default bucket for your environment. Currently, logs are uploaded every minute. However, some delay can happen between when the log is produced and when it is uploaded. Logs are available in any blob storage service.

Global Logs

To find the global log information for a given job, check the logs for the same cluster_id where job_id=none in the time range of interest. The bucket path looks like this:

logs/spark/year=<year>/month=<month>/day=<day>/cluster_id=<spark_cluster_id>/.

Example: gs://ascend-io-demo-gcp-default/logs/spark/year=2022/month=08/day=18/cluster_id=sparkworker-1660782753-4d171028/job_id=none/exec-1_2022-08-18-00-30_0.json.gz
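Because the path is partitioned by date and cluster, the prefix for a given day can be assembled programmatically. The helper below is a minimal sketch: `spark_log_prefix` is a hypothetical name, and the zero-padded month and day as well as the `job_id=none` segment are inferred from the example path above.

```python
from datetime import date


def spark_log_prefix(day: date, cluster_id: str, job_id: str = "none") -> str:
    """Build the bucket-relative prefix for one day's logs of a Spark cluster.

    job_id defaults to "none", the value used for global logs.
    """
    return (
        f"logs/spark/year={day.year:04d}/month={day.month:02d}/day={day.day:02d}/"
        f"cluster_id={cluster_id}/job_id={job_id}/"
    )


prefix = spark_log_prefix(date(2022, 8, 18), "sparkworker-1660782753-4d171028")
```

Prepending the bucket URL for your environment to this prefix gives the location to list or download from.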

Note: In the log files, each JSON object occupies a single line. The two examples below are expanded across multiple lines for readability.

{
  "log": "[72.034s][info ][gc ] GC(37) Concurrent Cycle 174.831ms",
  "pod_id": "sparkworker-1660782753-4d171028",
  "job_id": "none",
  "role": "driver",
  "level": "UNKNOWN",
  "timestamp": "2022-08-18T00:33:47.994+0000",
  "cluster_id": "sparkworker-1660782753-4d171028"
}

| Key | Description |
|---|---|
| log | The original log message. |
| pod_id | The Kubernetes pod that emitted the log. |
| job_id | Object path. For global logs, this always has a value of "none". |
| role | The Spark role of the pod that emitted the log, such as "driver" or "exec-1" in the examples. |
| level | If the log is parseable, this will be the level it's parsed to. |
| timestamp | The timestamp assigned to the log when it arrived at the collector. |
| cluster_id | The ID of the Spark cluster. It's also the prefix of the pod_id. |
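Since each file is gzip-compressed (note the .json.gz suffix) and holds one JSON object per line, a downloaded file can be read with Python's standard library. This is a sketch under the assumption that the files are UTF-8 encoded; `read_log_records` is a hypothetical helper name.

```python
import gzip
import json
from typing import Iterator


def read_log_records(path: str) -> Iterator[dict]:
    """Yield one dict per non-empty line of a gzipped, line-delimited JSON file."""
    with gzip.open(path, mode="rt", encoding="utf-8") as handle:
        for line in handle:
            line = line.strip()
            if line:
                yield json.loads(line)
```

Each yielded dict exposes the keys from the table above, so `record["level"]` or `record["timestamp"]` can be used for filtering or sorting.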

Specific Job Logs

Logs for a specific job have additional keys that identify the component. The bucket path looks like this:

logs/spark/year=<year>/month=<month>/day=<day>/cluster_id=<spark_cluster_id>/job_id=<job_id>/exec-<timestamp>.

Example: gs://ascend-io-demo-gcp-default/logs/spark/year=2022/month=08/day=18/cluster_id=sparkworker-1660782753-4d171028/job_id=5d45774a-2fd6-4dae-8e29-81af7d44483c/driver_2022-08-18-00-30_0.json.gz
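The key=value path segments can be parsed back into a dictionary, which is handy when grouping downloaded files by cluster or job. The following is a sketch; `parse_log_path` is a hypothetical helper, and it simply ignores any segment without an equals sign (the scheme, the bucket name, and the file name).

```python
def parse_log_path(path: str) -> dict:
    """Extract the key=value partition segments from a log object path."""
    parts = {}
    for segment in path.split("/"):
        key, sep, value = segment.partition("=")
        if sep and key and value:
            parts[key] = value
    return parts


example = (
    "gs://ascend-io-demo-gcp-default/logs/spark/year=2022/month=08/day=18/"
    "cluster_id=sparkworker-1660782753-4d171028/"
    "job_id=5d45774a-2fd6-4dae-8e29-81af7d44483c/driver_2022-08-18-00-30_0.json.gz"
)
parsed = parse_log_path(example)
```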

{
  "log": "22/08/18 00:33:33 WARN udf_1f8bc55eba5f49f5bb02d9f381838668.py:4 example udf log",
  "pod_id": "sparkworker-1660782753-4d171028-exec-1",
  "job_id": "5d45774a-2fd6-4dae-8e29-81af7d44483c",
  "role": "exec-1",
  "level": "WARN",
  "timestamp": "2022-08-18T00:33:34.975+0000",
  "cluster_id": "sparkworker-1660782753-4d171028",
  "data_service_id": "_ascend",
  "dataflow_id": "test_graph_deploy_1660157043467288064",
  "component_id": "PySpark_Reduction_with_Inner_Join"
}

Job logs have the same JSON keys as global logs with the following differences and additions:

| Key | Description |
|---|---|
| job_id | Unlike global logs, this key will have a specific value. |
| data_service_id | Data Service ID name. |
| dataflow_id | Dataflow ID name. |
| component_id | Component ID name. |
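With these additional keys, parsed records can be narrowed down to a single component. The sketch below works on dicts shaped like the JSON examples in this section; `filter_component_logs` is a hypothetical helper, and the two sample records are abbreviated copies of the global and job examples.

```python
from typing import Iterable, List, Optional


def filter_component_logs(
    records: Iterable[dict], component_id: str, level: Optional[str] = None
) -> List[dict]:
    """Keep records for one component, optionally restricted to one level.

    Global logs carry no component_id key, so they never match.
    """
    return [
        record
        for record in records
        if record.get("component_id") == component_id
        and (level is None or record.get("level") == level)
    ]


records = [
    {"job_id": "none", "role": "driver", "level": "UNKNOWN"},  # global log
    {
        "job_id": "5d45774a-2fd6-4dae-8e29-81af7d44483c",
        "role": "exec-1",
        "level": "WARN",
        "component_id": "PySpark_Reduction_with_Inner_Join",
    },
]
matches = filter_component_logs(records, "PySpark_Reduction_with_Inner_Join", level="WARN")
```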

Ascend-Hosted Environments (AWS Only)

For Ascend-hosted environments, we currently write Spark logs to an AWS S3 bucket. To access the logs, contact support to configure permissions. This requires that you have an AWS account.

- First, we need the ID of the main AWS account you want to access logs from. We will take the Account ID and provision access for it.

- Once access has been provisioned, we'll send you a role ARN and the logs bucket name. The ARN will look similar to this:

arn:aws:iam::<ASCEND_ENVIRONMENT_ACCOUNT_ID>:role/ascend-io-<subdomain>-logs-read

Once permissions are configured, you can use any method of AWS role assumption to access the logs. An easy way is to add an entry like the one below to your AWS config file, where role_arn is the ARN we provided. Once that profile entry is added, you should be able to use aws s3 commands to list and copy files out of the bucket.

[profile aws-demo-logs]

region = <ASCEND_ENVIRONMENT_REGION>

role_arn = arn:aws:iam::<ASCEND_ENVIRONMENT_ACCOUNT_ID>:role/<ASCEND_LOGS_ACCESS_ROLE_NAME>

source_profile = <YOUR_SOURCE_PROFILE>
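With the profile in place, the bucket can also be listed from Python. The sketch below assumes the third-party boto3 package is installed and relies on its `Session(profile_name=...)`, `get_paginator("list_objects_v2")`, and `paginate(Bucket=..., Prefix=...)` calls. `make_s3_client` and `list_log_keys` are hypothetical helper names, and the `logs/spark/` prefix assumes the same layout as the bucket paths described earlier.

```python
from typing import Iterator


def make_s3_client(profile_name: str = "aws-demo-logs"):
    """Create an S3 client that assumes the role configured in the named profile."""
    import boto3  # imported lazily; requires the boto3 package

    return boto3.Session(profile_name=profile_name).client("s3")


def list_log_keys(s3_client, bucket: str, prefix: str = "logs/spark/") -> Iterator[str]:
    """Yield every object key under the prefix, following pagination."""
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # "Contents" is absent from a page when no keys match.
        for obj in page.get("Contents", []):
            yield obj["Key"]


# Usage (placeholder bucket name; replace it with the one provided to you):
# for key in list_log_keys(make_s3_client(), "<LOGS_BUCKET_NAME>"):
#     print(key)
```

Passing the client in as an argument keeps `list_log_keys` independent of how the role is assumed, so any other method of AWS role assumption works as well.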

For enterprise security customers in AWS and Azure, there are origin-based access controls on all buckets (including the logs bucket). To access logs from the bucket, please send a request to Ascend with the IPs (or, in AWS, the VPC endpoints) that you would like to permit access from.
