Querying from DataGrip

Using Ascend's JDBC / ODBC Connection, developers can query Ascend directly from SQL tools like DataGrip.

Although this article walks through the setup for DataGrip, the steps should be very similar for other tools.

Connecting To DataGrip

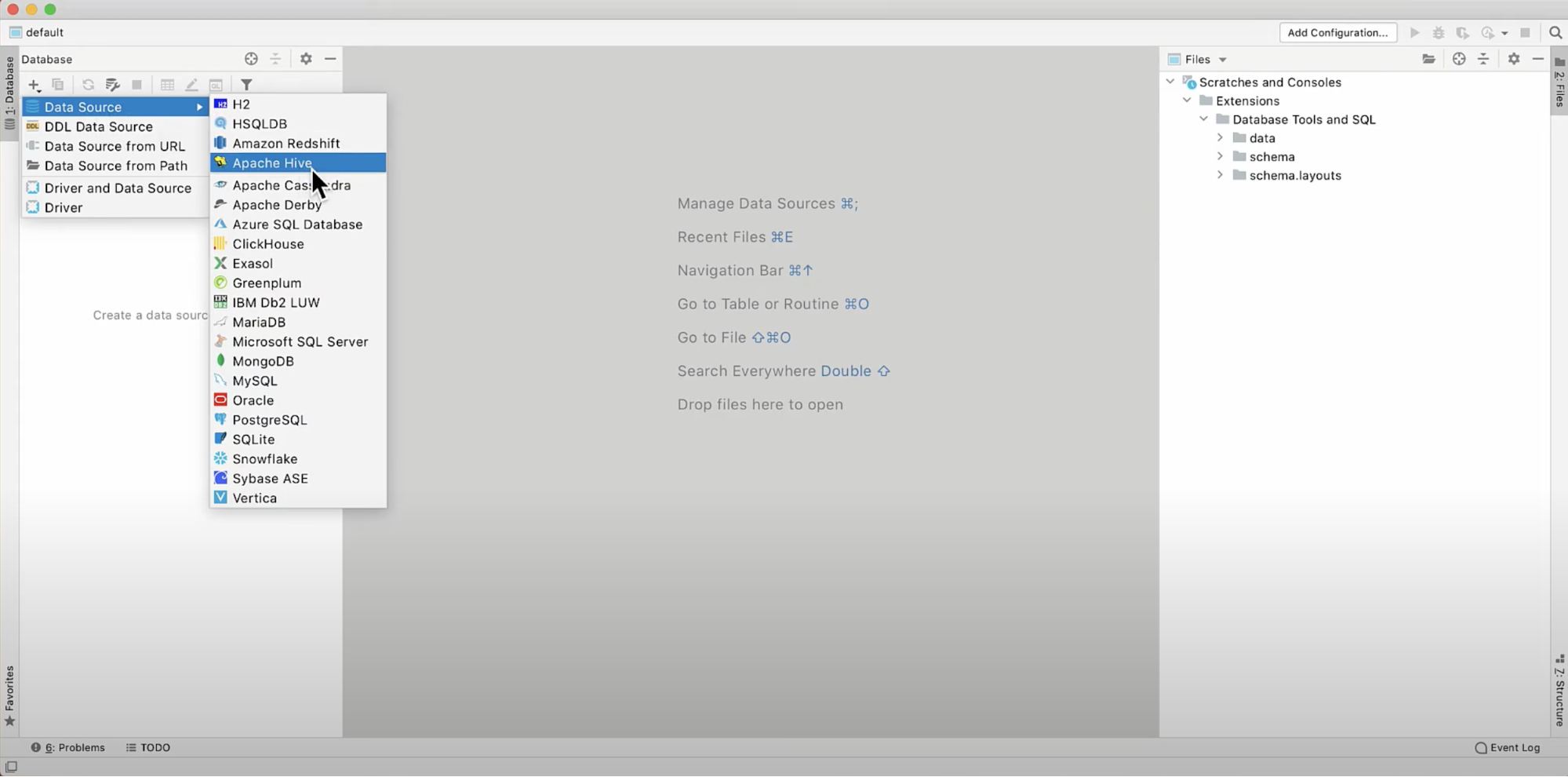

- Create a new database. For the data source type, prefer Apache Spark if present, otherwise use Apache Hive.

Using the Apache Hive Driver

The Apache Hive driver that ships with DataGrip is version 3, and Spark is only compatible with Hive driver versions up to 2.3.7. If you encounter difficulty connecting, you will likely need to download the Hive Standalone JAR for 2.3.7 from Maven Central and use that JAR instead of the one embedded in DataGrip.

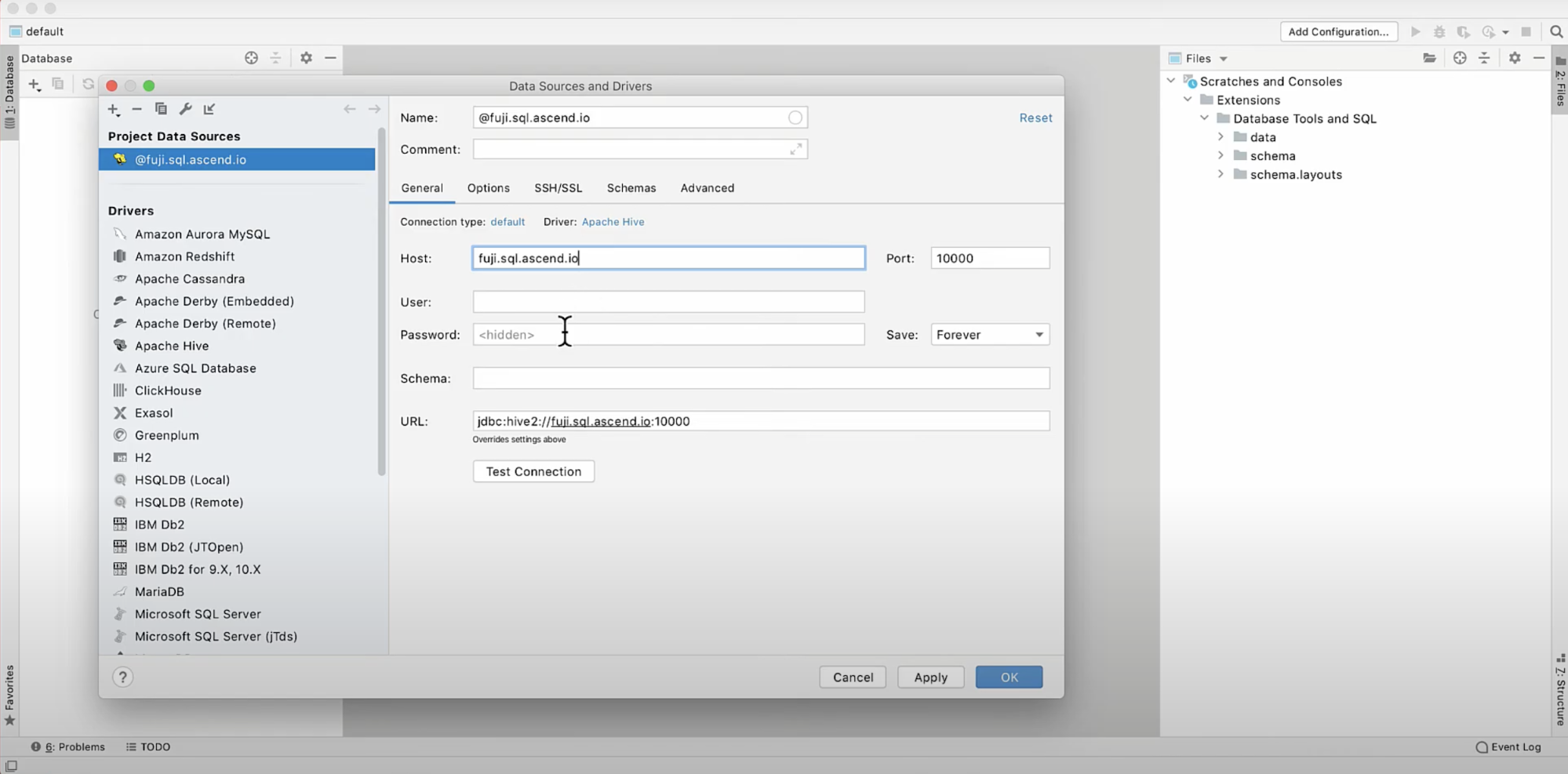

- Fill in the host with your Ascend domain in the format `<ascend-subdomain>.sql.ascend.io`.

- Fill in the port as `10000`.

- Enter the Username and Password from an API Token linked to a Service Account.

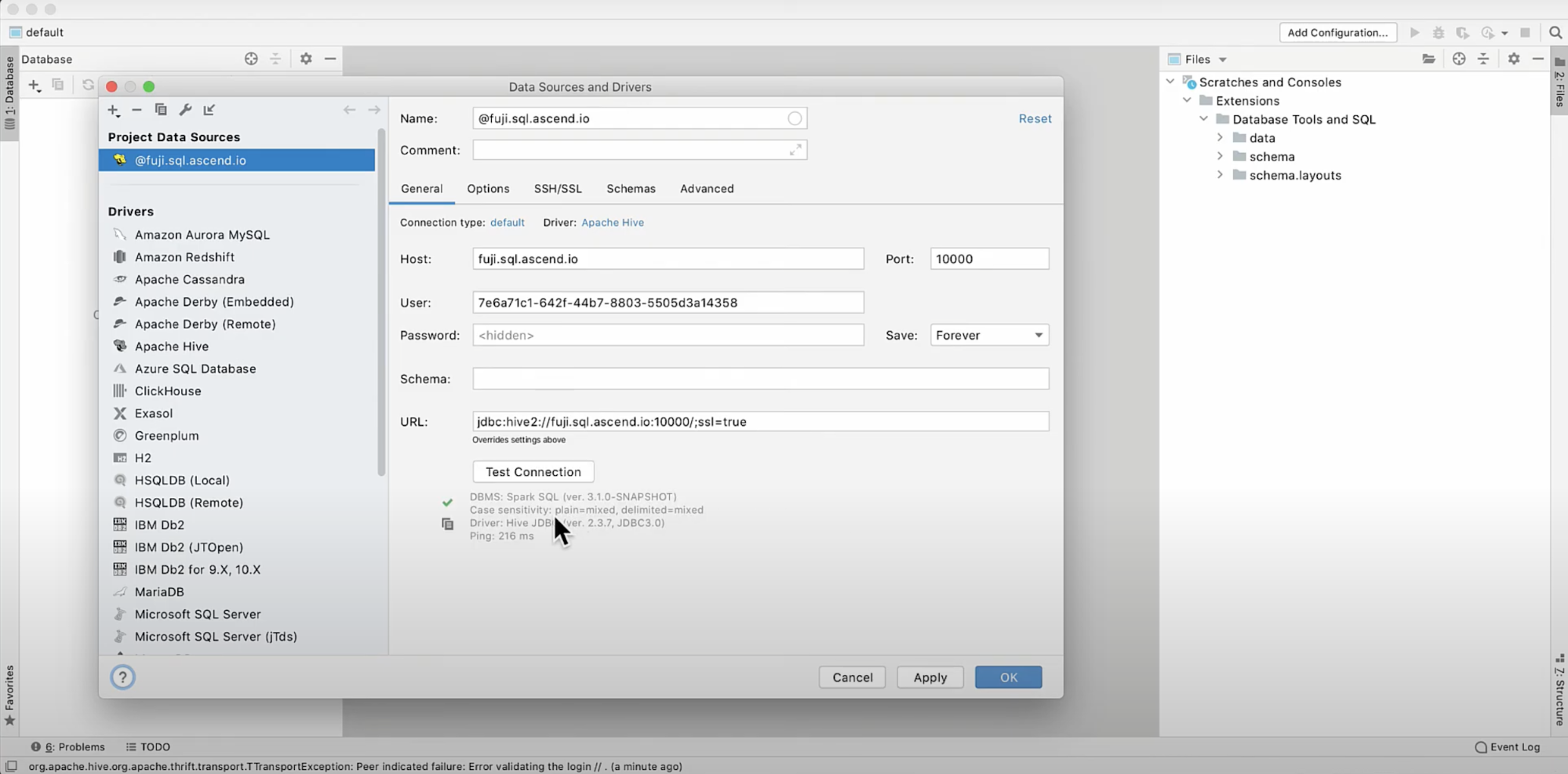

- Append `ssl=true` to the URL.

- Test the connection to ensure the setup is correct.
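For reference, the host, port, and `ssl=true` settings above combine into a single connection URL. The following is a minimal sketch of how that URL might be assembled, assuming the standard HiveServer2 `jdbc:hive2://` scheme; the function name `ascend_jdbc_url` and the subdomain `mycompany` are hypothetical, and the exact URL that DataGrip generates may differ slightly.

```python
def ascend_jdbc_url(subdomain: str, port: int = 10000) -> str:
    """Build a HiveServer2-style JDBC URL for an Ascend SQL endpoint.

    Assumption: the endpoint accepts the standard jdbc:hive2:// URL layout.
    """
    if not subdomain or "." in subdomain or "/" in subdomain:
        raise ValueError("expected a bare subdomain such as 'mycompany'")
    host = f"{subdomain}.sql.ascend.io"
    # ssl=true is appended as a session variable after the (empty) database name.
    return f"jdbc:hive2://{host}:{port}/;ssl=true"


# 'mycompany' is a placeholder subdomain used only for illustration.
print(ascend_jdbc_url("mycompany"))
```

Comparing the printed value against the URL field in the DataGrip dialog can help spot a missing `ssl=true` or a mistyped host before testing the connection.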

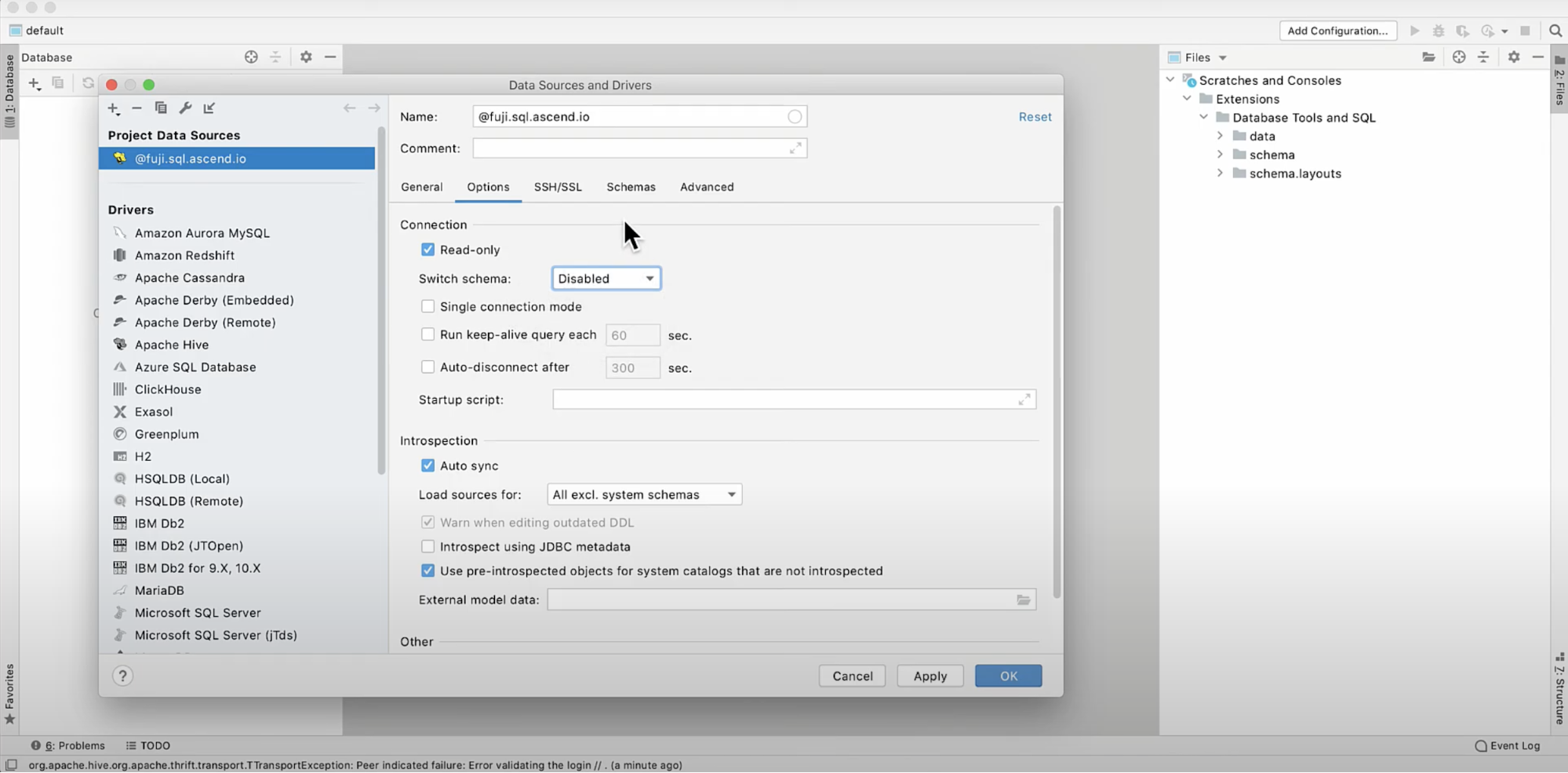

- Switch to the Options tab to enable the connection as "Read-only".

- Disable automatic schema switching.

- Create the database.

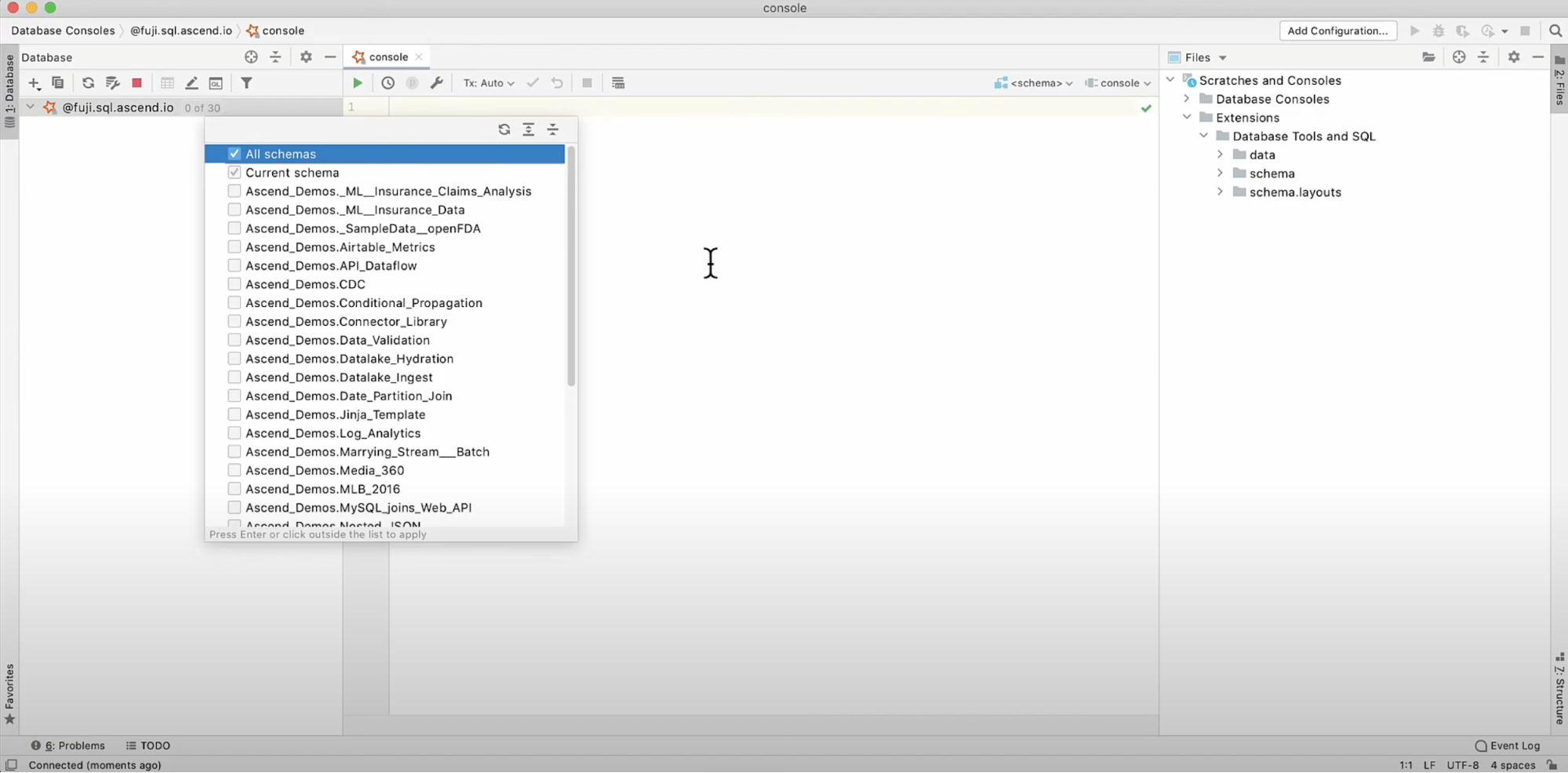

- By default, DataGrip does not include any schemas. Click the database connection, select "All schemas", then click the "Refresh" icon.

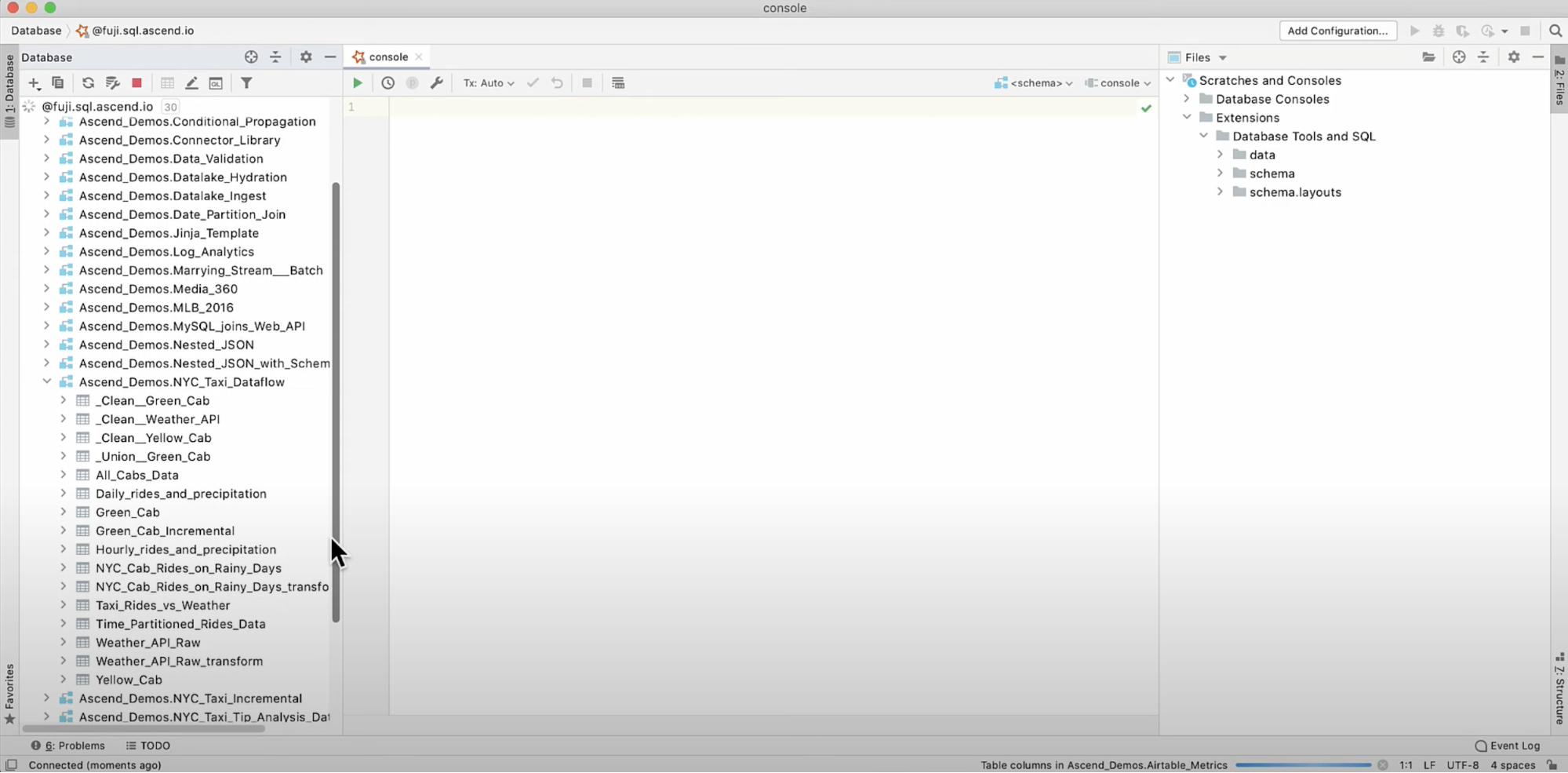

- The DataGrip catalog should now be populated: each Dataflow appears as a separate schema, and each component is mapped to a table.

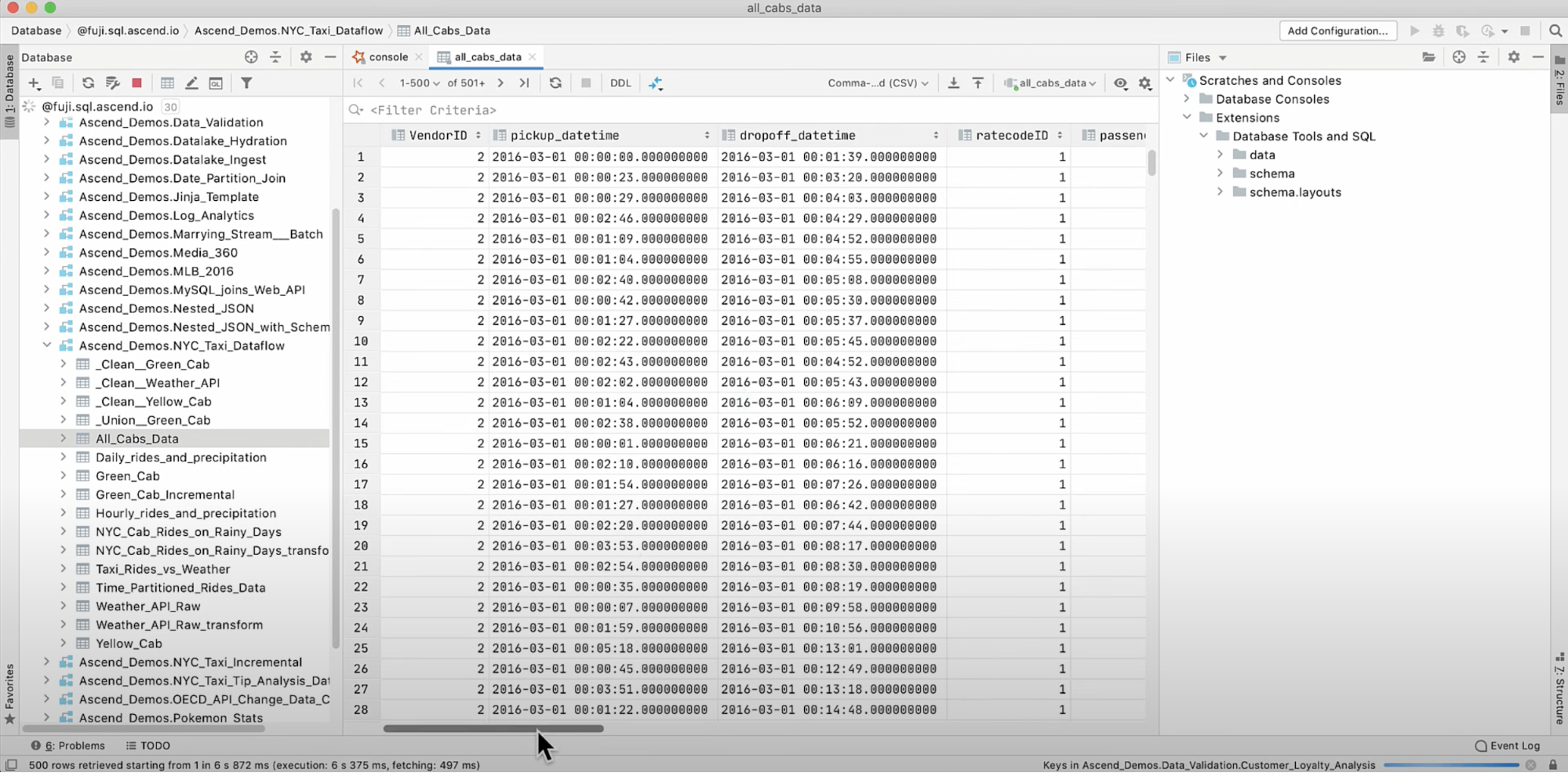

- Click on a table to view the records from that component.

This style of working makes it really easy to explore our data as we're building our pipelines. The SQL syntax is standard Spark SQL, so we are free to use the full capabilities of the language.
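Because each Dataflow is exposed as a schema and each component as a table, a query addresses a component as `schema.table`. The sketch below builds a simple Spark SQL preview query for pasting into a DataGrip console. The helper names and the identifiers `my_dataflow` and `my_component` are hypothetical; substitute the names shown in your own catalog.

```python
def quote_ident(name: str) -> str:
    """Quote a Spark SQL identifier with backticks, doubling any embedded backtick."""
    return "`" + name.replace("`", "``") + "`"


def preview_query(dataflow: str, component: str, limit: int = 100) -> str:
    """Return a SELECT that previews rows from one component of a Dataflow."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    # The Dataflow maps to the schema and the component maps to the table.
    table = f"{quote_ident(dataflow)}.{quote_ident(component)}"
    return f"SELECT * FROM {table} LIMIT {int(limit)}"


# Placeholder names; real ones come from the DataGrip catalog tree.
print(preview_query("my_dataflow", "my_component", 10))
```

Quoting with backticks keeps the query valid when a Dataflow or component name contains spaces or other special characters.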
