Create a Weather Domain

Here, we'll work through creating our second data product, Weather Domain.

To create a Weather Domain, we'll first create a Read Connector for WEATHER_API_RAW and WEATHER_STATIONS and clean the data with Snowflake SQL Transforms. Then we'll combine the cleaned data.

Step 1: Creating a Weather Data Read Connectors

For the Weather Domain, we'll skip creating a new Connection since Connections are accessible throughout the Data Service.

Create a WEATHER_API_RAW Read Connector

Select USE on the Sample Datasets S3 Connection and select Skip and configure manually. Enter the following parameters:

WEATHER_API_RAWMatchweather/api_data.csv| Field | Value |

|---|---|

| Parser | CSV |

| Fields Have a Header Row | Check this box. |

Leave the remaining fields unconfigured. Select GENERATE SCHEMA to see a preview of the CSV to confirm everything looks accurate. Ascend will automatically parse the header along with the first few lines of data to generate the schema and allow you to inspect it.

Once schema is generated, select CREATE. Ascend will begin ingesting the green cab data from the S3 bucket. Once the data is ingested, the Read Connector will show UP TO DATE.

Create WEATHER_STATIONS Read Connector

Select USE on the Sample Datasets S3 Connection and select Skip and configure manually. Enter the following parameters:

WEATHER_STATIONSPrefixstations/| Field | Value |

|---|---|

| Parser | CSV |

| Fields Have a Header Row | Check this box. |

Select GENERATE SCHEMA to see a preview of the CSV to confirm everything looks accurate. Ascend will automatically parse the header along with the first few lines of data to generate the schema and allow you to inspect it. You can also preview the schema information to verify accuracy.

Step 2: Cleaning and Combining Data with Transforms

Now that data is ingested into Ascend, the data needs to be parsed, cleaned and combined.

Create a PARSED_WEATHER_DATA Transform

Create a new Transform Connector from the WEATHER_API_RAW Read Connector by left-selecting the vertical ellipsis and selecting Create new Transform.

Name the transform "PARSED_WEATHER_DATA" and paste the following code into the query editor:

PARSED_WEATHER_DATASELECT

TO_DATE(GET(w.PARSED, 'date')) as DATE,

GET(w.PARSED, 'datatype') as DATATYPE,

GET(w.PARSED, 'station') as STATION,

TO_DECIMAL(GET(w.PARSED, 'value')) as VALUE,

'weather' as WEATHER_TYPE

FROM

(SELECT PARSE_JSON(w.HTTP_RESPONSE) as PARSED FROM {{WEATHER_API_RAW}} AS w) AS wSELECT

parsed.date as DATE,

parsed.datatype as DATATYPE,

parsed.station as STATION,

parsed.value as VALUE,

'weather' as WEATHER_TYPE

FROM

(SELECT FROM_JSON(HTTP_RESPONSE, "date date, datatype string, station string, attributes string, value decimal") as parsed FROM {{WEATHER_API_RAW}})SELECT

DATE(STRING((w.PARSED.date))) as DATE,

STRING(w.PARSED.datatype) as DATATYPE,

STRING(w.PARSED.station) as STATION,

FLOAT64(w.PARSED.value) as VALUE,

'weather' as WEATHER_TYPE

FROM

(SELECT SAFE.PARSE_JSON(w.HTTP_RESPONSE) as PARSED FROM {{WEATHER_API_RAW}} AS w) AS wCreate a CLEAN_WEATHER_DATA Transform

Create another transform from the PARSED_WEATHER_DATA Transform to align the fields to .

Name the Transform "CLEAN_WEATHER_DATA" and paste the following code into the query editor:

CLEAN_WEATHER_DATASELECT

ADD_MONTHS(DATEADD(day, UNIFORM(0,31, RANDOM()), w.DATE), -48) as WEATHER_DATE,

w.DATATYPE,

w.STATION,

CASE

WHEN SUM(w.VALUE) >= 2.5 THEN 'Rainy'

ELSE 'Not Rainy'

END as WEATHER,

SUM(w.value) as PRECIPITATION

FROM

{{PARSED_WEATHER_DATA}} AS w

WHERE

w.DATATYPE = 'PRCP'

GROUP BY

w.DATATYPE,

w.STATION,

WEATHER_DATESELECT

ADD_MONTHS(DATEADD(day, CAST(RAND()*31 AS INT), w.DATE), -48) as WEATHER_DATE,

w.DATATYPE,

w.STATION,

CASE

WHEN SUM(w.VALUE) >= 2.5 THEN 'Rainy'

ELSE 'Not Rainy'

END as WEATHER,

SUM(w.value) as PRECIPITATION

FROM

{{PARSED_WEATHER_DATA}} AS w

WHERE

w.DATATYPE = 'PRCP'

GROUP BY

w.DATATYPE,

w.STATION,

WEATHER_DATESELECT

DATE_SUB(DATE_ADD(w.DATE, INTERVAL CAST(RAND() * 31 AS INT64) DAY), INTERVAL 4 YEAR) as WEATHER_DATE,

w.DATATYPE,

w.STATION,

CASE

WHEN SUM(w.VALUE) >= 2.5 THEN 'Rainy'

ELSE 'Not Rainy'

END as WEATHER,

SUM(w.value) as PRECIPITATION

FROM

{{PARSED_WEATHER_DATA}} AS w

WHERE

w.DATATYPE = 'PRCP'

GROUP BY

w.DATATYPE,

w.STATION,

WEATHER_DATECombine the Weather and Station Data

Now that we have station data and clean weather data, we'll create the data with a Transform using Snowflake SQL.

Create a new Transform Connector from the CLEAN_WEATHER_DATA Read Connector by left-selecting the vertical elipsis and selecting Create new Transform.

Name the transform "WEATHER_AND_STATION" and paste the following code into the query editor:

WEATHER_AND_STATIONSELECT

w.*,

NVL(ws.name,'Unknown Station') as station_name,

ws.latitude as station_latitude,

ws.longitude as station_longitude

FROM

{{CLEAN_WEATHER_DATA}} w

LEFT JOIN {{WEATHER_STATIONS}} ws ON w.station = CONCAT('GHCND:',ws.id)SELECT

w.*,

NVL(ws.name,'Unknown Station') as station_name,

ws.latitude as station_latitude,

ws.longitude as station_longitude

FROM

{{CLEAN_WEATHER_DATA}} w

LEFT JOIN {{WEATHER_STATIONS}} ws ON w.station = CONCAT('GHCND:',ws.id)SELECT

w.*,

IFNULL(ws.name,'Unknown Station') as station_name,

ws.latitude as station_latitude,

ws.longitude as station_longitude

FROM

{{CLEAN_WEATHER_DATA}} w

LEFT JOIN {{WEATHER_STATIONS}} ws ON w.station = CONCAT('GHCND:',ws.id)Step 3: Create Data Share Connectors

The final step in our Weather Domain is to create a Data Share.

Create a MESH_DOMAIN_WEATHER_DATA Data Share

Right-select the vertical ellipsis from the WEATHER_AND_STATION Transform and select Create New Data Share.

Name the Data Share MESH_DOMAIN_WEATHER_DATA. Ensure the Upstream field is set to WEATHER_AND_STATION. For Permission Settings, select "All teams in all Data Services...."

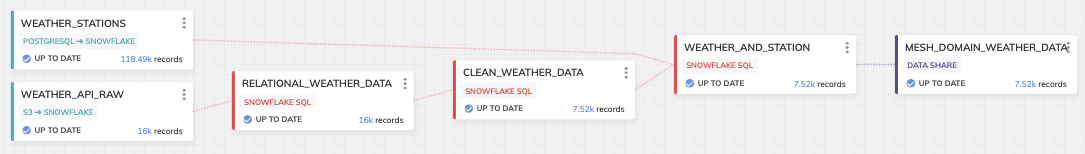

MESH_DOMAIN_WEATHER_DATAYour completed DAG should look like this:

Next Steps

Congratulations on creating a weather data domain! You've ingested weather data, transformed the data so the schema from two different sources aligns, and created a Data Share. Now that we have two data domains, you can create your first Data Mesh.

Updated 8 months ago