Google BigQuery Write Connector (Legacy)

Creating a Google BigQuery Write Connector

Prerequisites:

- Access credentials

- Data location on Google BigQuery

- Data location on Google Cloud Storage

- Partition column from the upstream transform, if applicable

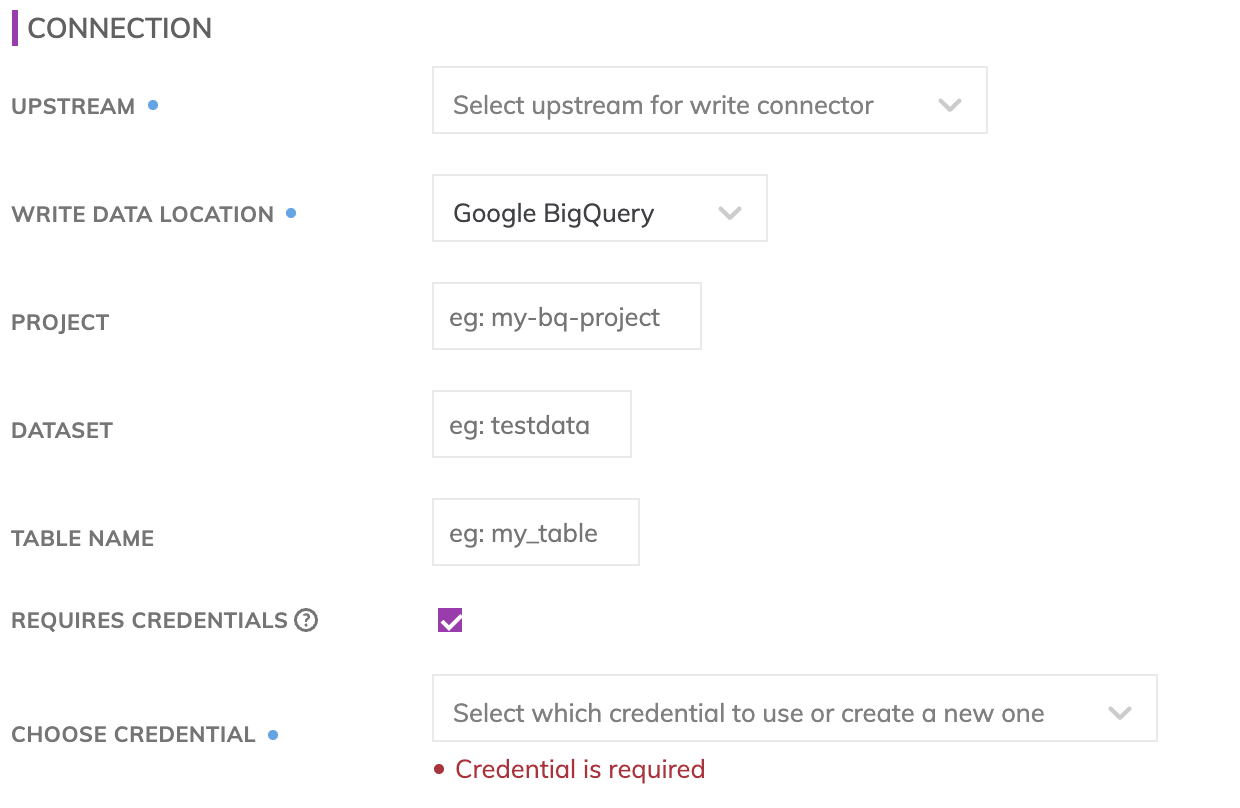

Specify the upstream and BigQuery dataset information

Suggestion: For optimal performance, ensure the upstream transform is partitioned.

BigQuery connection details

Specify the BigQuery connection details. These include how to connect to Google BigQuery and where the data resides within BigQuery.

- Project: The Google Cloud project in which the table will be created (e.g. my-data-warehouse-project).

- Dataset: The name of the dataset in which the BigQuery table will be created (e.g. my_very_big_dataset). Note: the dataset must be created outside of the Ascend environment; it is not created automatically if it does not already exist.

- Table Name: The name of the BigQuery table. The table does not need to be created manually before you create the Write Connector; the Write Connector creates it automatically at runtime.

- BigQuery credentials: The JSON key for a service account that has write access to the specified dataset.
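The Project, Dataset, and Table Name settings together identify a single BigQuery table, written in standard SQL as `project.dataset.table`. The sketch below combines the three values and applies simplified versions of Google's naming rules (project IDs: 6 to 30 lowercase letters, digits, or hyphens, starting with a letter; dataset names: letters, digits, and underscores). The function name `qualified_table_id` and the table name `events` are hypothetical, and this is not Ascend's own validation logic.

```python
import re

# Simplified GCP project ID rule: 6-30 characters of lowercase letters,
# digits, or hyphens; starts with a letter; does not end with a hyphen.
PROJECT_ID_RE = re.compile(r"[a-z][a-z0-9-]{4,28}[a-z0-9]")

# Simplified BigQuery dataset rule: letters, digits, and underscores, up to 1,024 characters.
DATASET_ID_RE = re.compile(r"[A-Za-z0-9_]{1,1024}")


def qualified_table_id(project: str, dataset: str, table: str) -> str:
    """Combine the Project, Dataset, and Table Name settings into project.dataset.table."""
    if not PROJECT_ID_RE.fullmatch(project):
        raise ValueError(f"invalid project ID: {project!r}")
    if not DATASET_ID_RE.fullmatch(dataset):
        raise ValueError(f"invalid dataset name: {dataset!r}")
    # Table names allow a wider character set; only the length is checked here.
    if not 1 <= len(table) <= 1024:
        raise ValueError(f"invalid table name: {table!r}")
    return f"{project}.{dataset}.{table}"


print(qualified_table_id("my-data-warehouse-project", "my_very_big_dataset", "events"))
# my-data-warehouse-project.my_very_big_dataset.events
```

Note that underscores are valid in dataset names but not in project IDs, which is a common source of configuration mistakes.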

Testing BigQuery permissions

Use Test Connection to check whether all BigQuery permissions are correctly configured.

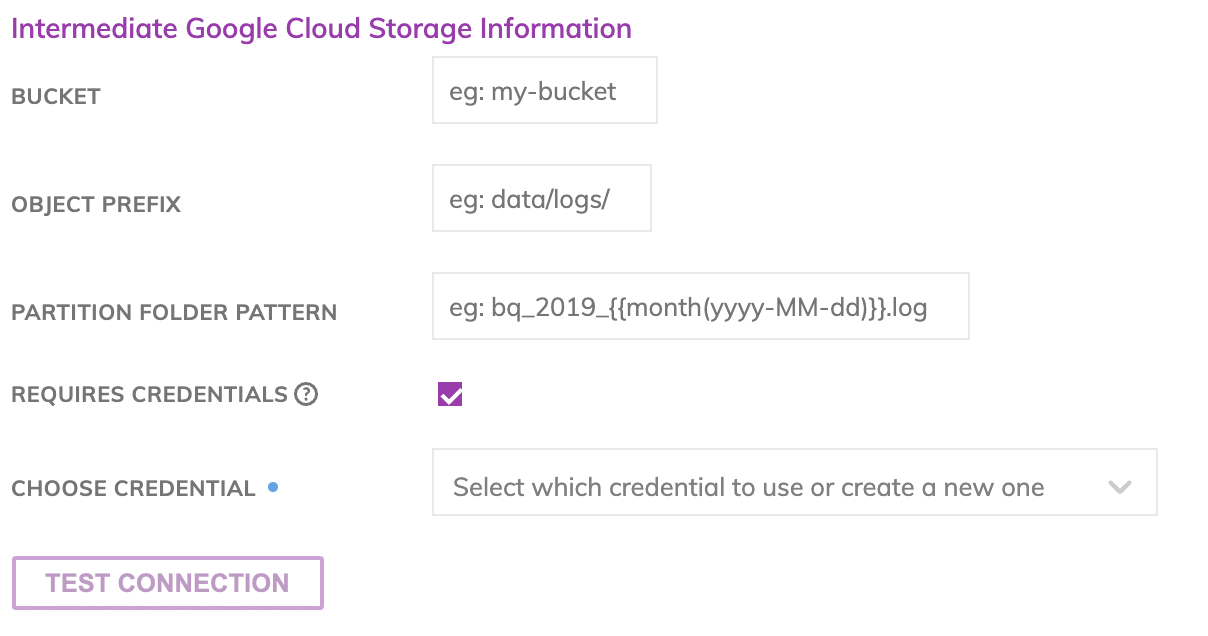

GCS data location

Google Cloud Storage (GCS) is used as a staging area before data is loaded into BigQuery. This requires configuring a GCS write connector. In the Intermediate Google Cloud Storage Information section, specify the GCS path where the data files will be created.

- Bucket: The GCS bucket name (e.g. my_data_bucket). Do not include any folder information in this field.

- Object Prefix: The hierarchical folder prefix within the bucket (e.g. good_data/my_awesome_table). Do not include a leading forward slash.

- Partition Folder Pattern: The folder pattern generated from the values of the upstream partition column. For example, you can use {{at_hour_ts(yyyy-MM-dd/HH)}}, where at_hour_ts is a partition column of the upstream transform.
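To make the three GCS fields concrete, the sketch below shows how a bucket, an object prefix, and a pattern such as {{at_hour_ts(yyyy-MM-dd/HH)}} could combine into one staging folder per partition. It assumes the format inside the parentheses uses Java-style date tokens (yyyy, MM, dd, HH) and translates them to Python `strftime` directives; Ascend's actual template engine is not documented here, so the helper names and the token mapping are hypothetical.

```python
import re
from datetime import datetime

# Hypothetical translation of Java-style date tokens to strftime directives.
# Only a handful of common tokens are covered.
JAVA_TO_STRFTIME = {"yyyy": "%Y", "MM": "%m", "dd": "%d", "HH": "%H", "mm": "%M", "ss": "%S"}
# Longest tokens first so "yyyy" is matched before any shorter token.
TOKEN_RE = re.compile("|".join(sorted(JAVA_TO_STRFTIME, key=len, reverse=True)))
# Matches placeholders of the form {{column(format)}}.
PLACEHOLDER_RE = re.compile(r"\{\{(\w+)\(([^)]*)\)\}\}")


def render_pattern(pattern: str, row: dict) -> str:
    """Replace each {{column(format)}} placeholder with the row's formatted value."""
    def substitute(match):
        column, java_fmt = match.group(1), match.group(2)
        strftime_fmt = TOKEN_RE.sub(lambda t: JAVA_TO_STRFTIME[t.group(0)], java_fmt)
        return row[column].strftime(strftime_fmt)

    return PLACEHOLDER_RE.sub(substitute, pattern)


def staging_folder(bucket: str, prefix: str, pattern: str, row: dict) -> str:
    """Build the gs:// folder for one partition, enforcing the Bucket and Object Prefix rules."""
    if "/" in bucket:
        raise ValueError("Bucket must not contain folder information")
    if prefix.startswith("/"):
        raise ValueError("Object Prefix must not start with a forward slash")
    return f"gs://{bucket}/{prefix.rstrip('/')}/{render_pattern(pattern, row)}"


row = {"at_hour_ts": datetime(2023, 1, 5, 14)}
print(staging_folder("my_data_bucket", "good_data/my_awesome_table",
                     "{{at_hour_ts(yyyy-MM-dd/HH)}}", row))
# gs://my_data_bucket/good_data/my_awesome_table/2023-01-05/14
```

Because the pattern contains a slash, each hour's data lands in its own subfolder under a per-day folder, which keeps partitions separate under the object prefix.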

Warning: Any data under the object prefix that is not produced by the upstream transform will be deleted automatically.

For example, if the Write Connector produces three files, A, B, and C, under the object prefix, and a file named output.txt already exists at the same location, Ascend will delete output.txt because Ascend did not generate it.
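The cleanup rule in the warning amounts to a set difference: every object under the prefix, minus the objects the connector produced. The sketch below models that rule with the A, B, C, and output.txt example; it is an illustration of the documented behavior, not Ascend's implementation, and the function name and the object other_data/keep.txt are hypothetical.

```python
from collections.abc import Iterable


def objects_to_delete(existing: Iterable[str], produced: Iterable[str], prefix: str) -> list[str]:
    """Return the objects under `prefix` that were not produced by the connector."""
    folder = prefix.rstrip("/") + "/"
    # Only objects inside the object prefix are candidates for deletion.
    under_prefix = {name for name in existing if name.startswith(folder)}
    return sorted(under_prefix - set(produced))


prefix = "good_data/my_awesome_table"
existing = [f"{prefix}/A", f"{prefix}/B", f"{prefix}/C", f"{prefix}/output.txt", "other_data/keep.txt"]
produced = [f"{prefix}/A", f"{prefix}/B", f"{prefix}/C"]
print(objects_to_delete(existing, produced, prefix))
# ['good_data/my_awesome_table/output.txt']
```

In practice this means the object prefix should be dedicated to the connector; anything else stored there is at risk.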

Google Cloud Storage Credentials

Enter the JSON key for a service account that has the Storage Admin and Storage Object Admin roles on the GCS path. Refer to the Google Cloud documentation for more details on GCS authentication.

Storage API: We rely on the Google Cloud Storage API to write to GCS locations, so the Storage API must be enabled. It should be enabled by default in GCP; if it is not, you can enable it at https://console.developers.google.com/apis/api/storage-api.googleapis.com/overview?project={your gcp id}.
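Both credential fields in this connector expect a Google Cloud service account JSON key. A quick structural check before pasting a key can catch the wrong kind of file, such as an OAuth client secret. The sketch below only inspects the JSON fields that service account keys contain (type, project_id, private_key_id, private_key, client_email); it cannot confirm the account's roles or that the Storage API is enabled, so Test Connection is still needed. The function name and the sample values in the usage example are hypothetical.

```python
import json

# Fields found in a Google Cloud service account JSON key file.
REQUIRED_FIELDS = ("type", "project_id", "private_key_id", "private_key", "client_email")


def service_account_email(raw_key: str) -> str:
    """Return the account's email if raw_key is structurally a service account key."""
    key = json.loads(raw_key)
    if not isinstance(key, dict):
        raise ValueError("key must be a JSON object")
    missing = [field for field in REQUIRED_FIELDS if not key.get(field)]
    if missing:
        raise ValueError(f"key is missing fields: {', '.join(missing)}")
    if key["type"] != "service_account":
        raise ValueError(f"expected type 'service_account', got {key['type']!r}")
    return key["client_email"]


# Hypothetical placeholder values; a real key is downloaded from the Google Cloud console.
sample = json.dumps({
    "type": "service_account",
    "project_id": "my-data-warehouse-project",
    "private_key_id": "0123abcd",
    "private_key": "-----BEGIN PRIVATE KEY-----\n...\n-----END PRIVATE KEY-----\n",
    "client_email": "ascend-writer@my-data-warehouse-project.iam.gserviceaccount.com",
})
print(service_account_email(sample))
# ascend-writer@my-data-warehouse-project.iam.gserviceaccount.com
```

The returned email is the principal that needs the Storage Admin and Storage Object Admin roles on the GCS path (and write access to the BigQuery dataset for the BigQuery key).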

Testing Connection

Use Test Connection to check whether all GCS permissions are correctly configured.

Updated 8 months ago