Spark SQL Transforms

Creating Spark SQL Transforms

To transform your data using Spark SQL, you use the Spark SQL transform component. There are three different methods to create Spark SQL transforms outlined below.

NOTE: Spark SQL Transforms are written using standard Spark SQL syntax.

First, navigate to a specific Dataflow within a Data Service. In the screenshots below, we have navigated to a data flow named "My Dataflow".

The most obvious way to create a transform is to create it from the "Build" menu (Method 1). Ascend also offers two convenient shortcut methods (Method 2 & Method 3) for creating a Spark SQL transform during dataflow construction.

These methods take advantage of Ascend's Queryable Dataflows and become part of a quick, intuitive and iterative process of data exploration and transformation, as you build your pipeline. Once you create an initial read connector or data feed component, building a pipeline with the shortcuts methods feels like "query, transform... repeat", until you get to your desired outputs (e.g. write connectors or data feeds).

Method 1: Create from the Build Menu

- Within the Dataflow, click the Build tab from the menu on the left side of the screen.

- From the Transform section, click the Spark SQL transform component.

This will open the CREATE NEW TRANSFORM panel, which is described below.

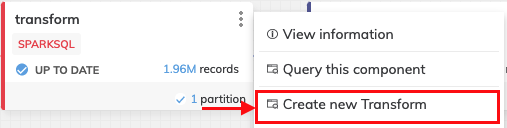

Method 2: Create from a Component

Right click a component from the canvas of a dataflow (such as a read connector or transform), or click the "three dot" menu on the component and select "Create new Transform".

This will open the CREATE NEW TRANSFORM panel, which is described below.

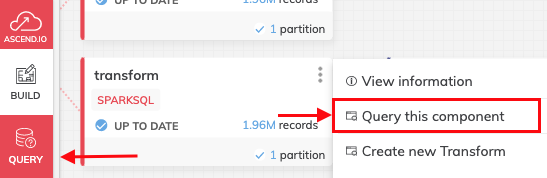

Method 3: Create from a Query

You can create a Query by using the "Query Tab" on the left-side menu bar. You can also right click a component (such as a read connector or transform), or click the "three dot" menu, and selecting "Query this component".

Once the COMPOSE NEW QUERY window pops up, you can work iteratively on your query to perform data exploration and get your transformation logic "just right". When you're ready, just click the TO TRANSFORM button at the bottom of the COMPOSE NEW QUERY window. Once you do, you'll see the CREATE NEW TRANSFORM panel below, pre-populated with your query!

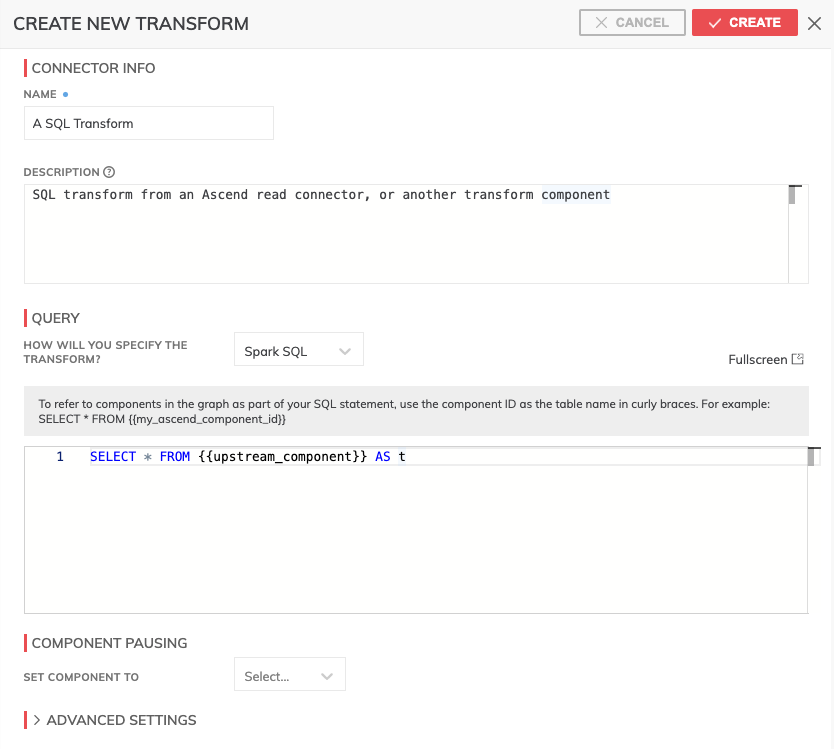

Create a New Transform

Once you are in the CREATE NEW TRANSFORM panel, depending on which method you chose from the three mentioned above, you will see the below.

- Name: the name of the Spark SQL transform component

- Description: enter text here that describes what the transform does, or any other metadata that is helpful to you, your team, or your organization. NOTE: You can use Markdown within these descriptions as well!

- Transform Language: this field asks you, "How will you specify the transform?". The language will already be set to Spark SQL based on how you navigated to the component, but you can see the other options available by choosing from the dropdown box.

- Query: a code editor in which you write the Spark SQL code expression you want to use to transform data. The editor offers syntax highlighting and code completion. Additionally, you must surround the name of the components used in the query using double curly braces - for example, {{my_component_name}}. Click "Fullscreen" (top right of the code editor) to go into full screen editing mode!

- Component Pausing: Running (default) or Paused. Pausing a component means it, and any components downstream from it, will not refresh/run until unpaused.

- Advanced Settings: Controls advanced features for this transform, such as choice of data partitioning strategies, inclusion of Ascend metadata columns in transform outputs, processing priority, partition union/filtering or configuration for the underlying Spark jobs.

Best Practice: Capture knowledge and documentationA good time to capture knowledge/documentation related to your components, transformations and/or code is when you're developing. This may be related to the code you wrote, or the data that flows through the component. Take advantage of the Description field and remember you can use Markdown, you never know who it will help down the line - it might even be you! 😃

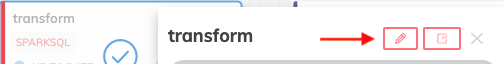

Edit an Existing Transform

Left click on a Spark SQL transform and click the "pencil" icon to edit it. The Spark SQL Transform editor panel will appear.

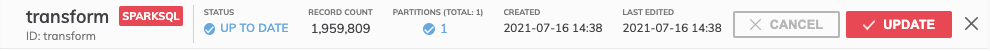

Additional metadata created during data processing will be visible in the panel's header.

- Record Count: The number of records processed by and stored in the component.

- Partitions: The number of physical partitions the data is stored in, for this component.

- Created: Date the component was created.

- Last Edited: Date the component was last edited/updated.

Make any changes you need to the component, and when you are done, click "Update" in the top right hand corner of the panel to save the changes.

Updated 9 months ago