Blob Storage Read Connector Parsers

Some Read Connectors offer a choice of parsers. The parsers described below have additional required or optional properties.

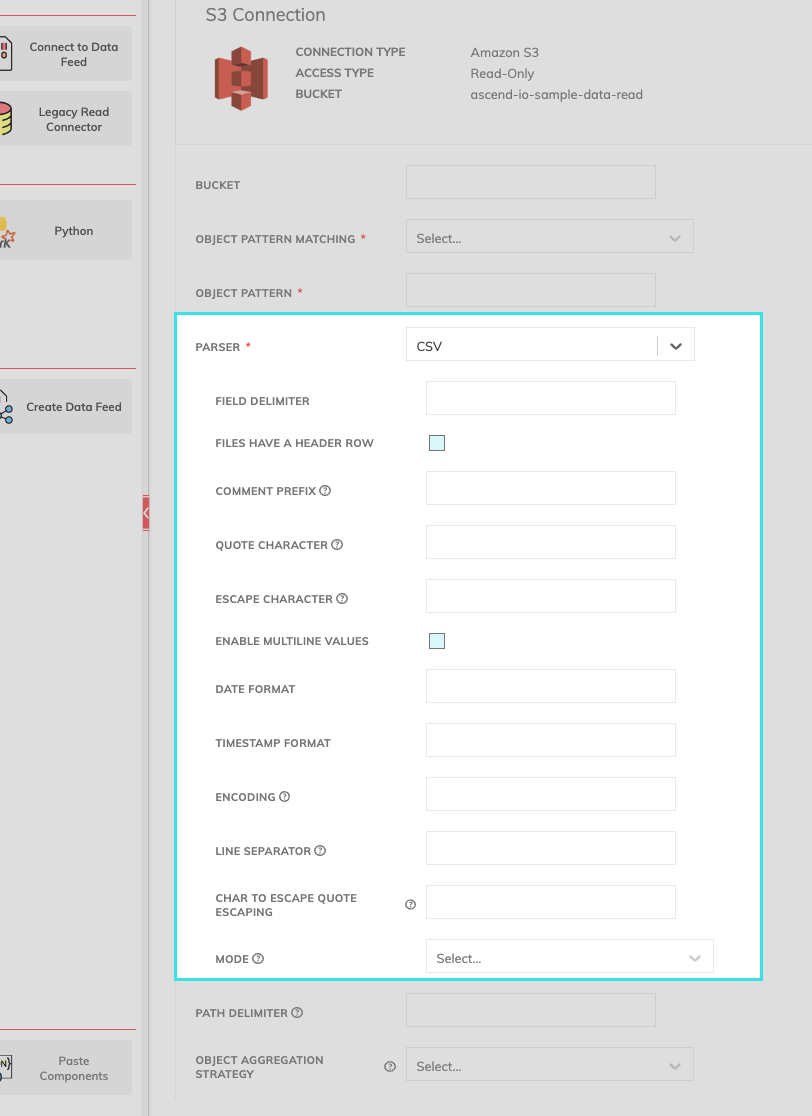

CSV Parser

The CSV parser provides a range of customizable parameters. Each parser for a Read Connector has specific parameters.

CSV Parser Fields

All fields available for the CSV parser are optional. We'll use the following text example to discuss each field:

    @Album released in 1967
    artist,album,track,verse
    The Beatles, Magical Mystery Tour, "Hello, Goodbye", "You say, /"Yes/", I say, /"No/" !/I say, /"Yes/",
    but I may mean, /"No/"!/"

| Field | Description |
|---|---|
| FIELD DELIMITER | Field delimiter to be used. Ex. Delimiter = |
| FILES HAVE A HEADER ROW | Tells the parser to use the first line as the column names in the schema. Selected by default. Deselect if the first row does not contain column names. Ex. Header Row = |
| COMMENT PREFIX | A single character or symbol; lines beginning with it are skipped. Disabled by default. Ex. `@Album released in 1967` |
| QUOTE CHARACTER | A single character used to quote values in which the separator can be part of the value. To turn off quoting, set the value to an empty string. Ex. `track = "Hello, Goodbye"` |
| ESCAPE CHARACTER | A single character used for escaping quotes inside an already quoted value. Ex. `verse = "You say, /"Yes/", I say, /"No/" !/I say, /"Yes/",` |
| ENABLE MULTILINE VARIABLES | Tells the parser that fields in the ingested data can span multiple lines. In most CSV files each record occupies a single line, with fields separated by commas, but some files contain multi-line fields. Ex. `verse = "You say, /"Yes/", I say, /"No/" !/I say, /"Yes/",` |
| DATE FORMAT | Format of the date type field in the CSV file. See Datetime Patterns for Formatting and Parsing. |
| TIMESTAMP FORMAT | Format of the timestamp type field in the CSV file. Specify the timestamp format if known. See Datetime Patterns for Formatting and Parsing. |
| ENCODING | Decodes CSV files using the given encoding type. Defaults to UTF-8, the most common encoding type. One known exception is files created on Windows, which requires |
| LINE SEPARATOR | Defines the line separator that should be used for parsing. Defaults to |
| CHAR TO ESCAPE QUOTE ESCAPING | Sets a single character used for escaping the escape for the quote character. Ex. `verse = "You say, /"Yes/", I say, /"No/" !/I say, /"Yes/",` |
| MODE | Sets the mode for handling corrupt records during parsing. Options are PERMISSIVE, DROPMALFORMED, and FAILFAST. |
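
As a rough illustration of how the delimiter, header row, comment prefix, quote character, escape character, and multiline options interact, the sketch below parses a tidied version of the sample text with Python's standard `csv` module. This is not Ascend's implementation: the function `parse_csv` and its parameter names are hypothetical, and the sample here closes the quoted verse so that it parses cleanly.

```python
import csv
import io

# Tidied sample: '@' marks a comment line, '/' escapes quotes inside a
# quoted value, and the verse spans two physical lines.
SAMPLE = (
    '@Album released in 1967\n'
    'artist,album,track,verse\n'
    'The Beatles, Magical Mystery Tour, "Hello, Goodbye", '
    '"You say, /"Yes/", I say, /"No/"\n'
    'but I may mean, /"No/""\n'
)


def parse_csv(text, delimiter=",", quote='"', escape="/",
              comment="@", header=True):
    """Hypothetical helper mirroring a few of the CSV parser fields."""
    # Keep line endings intact so multi-line quoted fields survive.
    lines = io.StringIO(text, newline="")
    # Skip comment lines. Note: this simple filter would also drop a
    # continuation line of a multi-line field that starts with the prefix.
    kept = (ln for ln in lines if not (comment and ln.startswith(comment)))
    reader = csv.reader(
        kept,
        delimiter=delimiter,
        quotechar=quote,
        escapechar=escape,
        doublequote=False,      # quotes are escaped, not doubled
        skipinitialspace=True,  # the sample has a space after each comma
    )
    rows = list(reader)
    if header and rows:
        names, data = rows[0], rows[1:]
        return [dict(zip(names, row)) for row in data]
    return rows


records = parse_csv(SAMPLE)
for name, value in records[0].items():
    print(f"{name}: {value!r}")
```

With the header option on, the first non-comment line supplies the column names; the quoted `track` keeps its embedded comma, and the escaped quotes and the line break inside `verse` are preserved as part of a single field.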

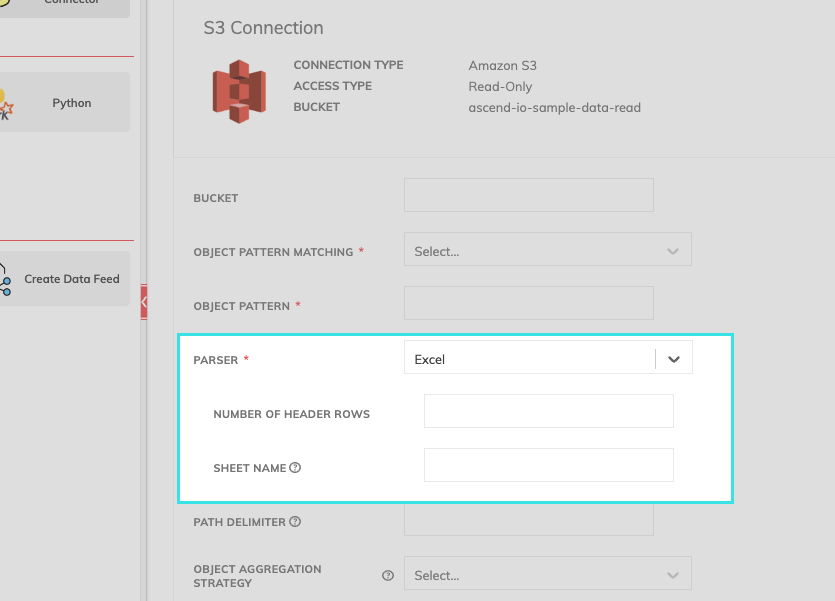
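
The MODE option names (PERMISSIVE, DROPMALFORMED, FAILFAST) match those used by Apache Spark's CSV reader. This page does not define their behavior, so the sketch below assumes Spark-like semantics: PERMISSIVE keeps a malformed row and fills missing values with nulls, DROPMALFORMED discards it, and FAILFAST raises an error. The function `apply_mode` is hypothetical, and "malformed" is simplified here to mean "wrong number of fields".

```python
def apply_mode(rows, expected_width, mode="PERMISSIVE"):
    """Hypothetical sketch of three corrupt-record modes (assumed semantics)."""
    if mode not in ("PERMISSIVE", "DROPMALFORMED", "FAILFAST"):
        raise ValueError(f"unknown mode: {mode}")
    result = []
    for index, row in enumerate(rows):
        if len(row) == expected_width:
            result.append(list(row))
        elif mode == "PERMISSIVE":
            # Pad short rows with None and drop any extra trailing fields.
            padded = list(row) + [None] * expected_width
            result.append(padded[:expected_width])
        elif mode == "DROPMALFORMED":
            # Silently skip the malformed row.
            continue
        else:  # FAILFAST
            raise ValueError(f"malformed record at row {index}: {row!r}")
    return result


rows = [["a", "b", "c"], ["d", "e"], ["f", "g", "h", "i"]]
print(apply_mode(rows, 3, "PERMISSIVE"))
print(apply_mode(rows, 3, "DROPMALFORMED"))
```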

Excel Parser

All fields for the Excel parser are optional.

Excel Parser Fields

| Field | Description |
|---|---|
| NUMBER OF HEADER ROWS | Number of header rows in the sheet being parsed. If no number is given, Ascend assumes the first row is the header row. |
| SHEET NAME | Name of the sheet to use. If no sheet is selected, the first sheet of the Excel file is used. |

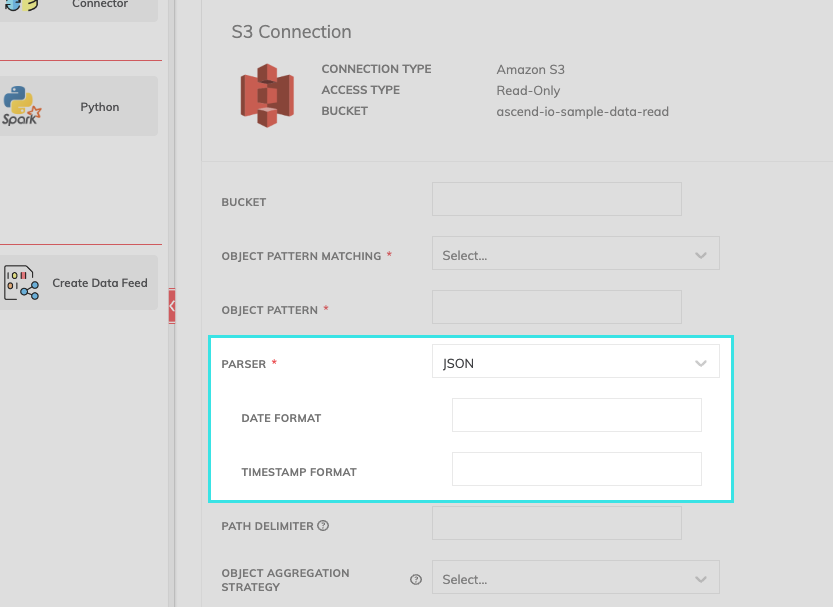

JSON Parser

All fields for the JSON parser are optional.

JSON Parser Fields

| Field | Description |
|---|---|
| DATE FORMAT | Format of the date type field in the JSON file. See Datetime Patterns for Formatting and Parsing. |
| TIMESTAMP FORMAT | Format of the timestamp type field in the JSON file. Specify the timestamp format if known. See Datetime Patterns for Formatting and Parsing. |
| ENCODING | Encoding of the JSON file. Defaults to UTF-8. |
| LINE SEPARATOR | Defines the line separator that should be used for parsing. When multiline is disabled, this field is required for input files that are not UTF-8 encoded. |
| ENABLE MULTILINE VALUES | Parses one record per file, where the record may span multiple lines. When enabled, each file is processed as a whole instead of being parallelized by line, which may affect processing time. |
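
The difference between line-by-line parsing and the multiline option can be sketched with Python's standard `json` module. This is only an illustration of the behavior described in the table, not Ascend's implementation; the function `parse_json` and its parameters are hypothetical.

```python
import json


def parse_json(data, encoding="utf-8", line_separator="\n", multiline=False):
    """Hypothetical sketch: one record per line, or one record per file."""
    text = data.decode(encoding)
    if multiline:
        # The whole file is a single record that may span many lines.
        return [json.loads(text)]
    # Otherwise each non-empty line is an independent record.
    return [
        json.loads(line)
        for line in text.split(line_separator)
        if line.strip()
    ]


line_delimited = b'{"id": 1}\n{"id": 2}\n'
pretty_printed = b'{\n  "id": 3,\n  "tags": ["a", "b"]\n}'

print(parse_json(line_delimited))
print(parse_json(pretty_printed, multiline=True))
```

Parsing the pretty-printed input without `multiline=True` would fail in this sketch, because no single line of it is a complete JSON document.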

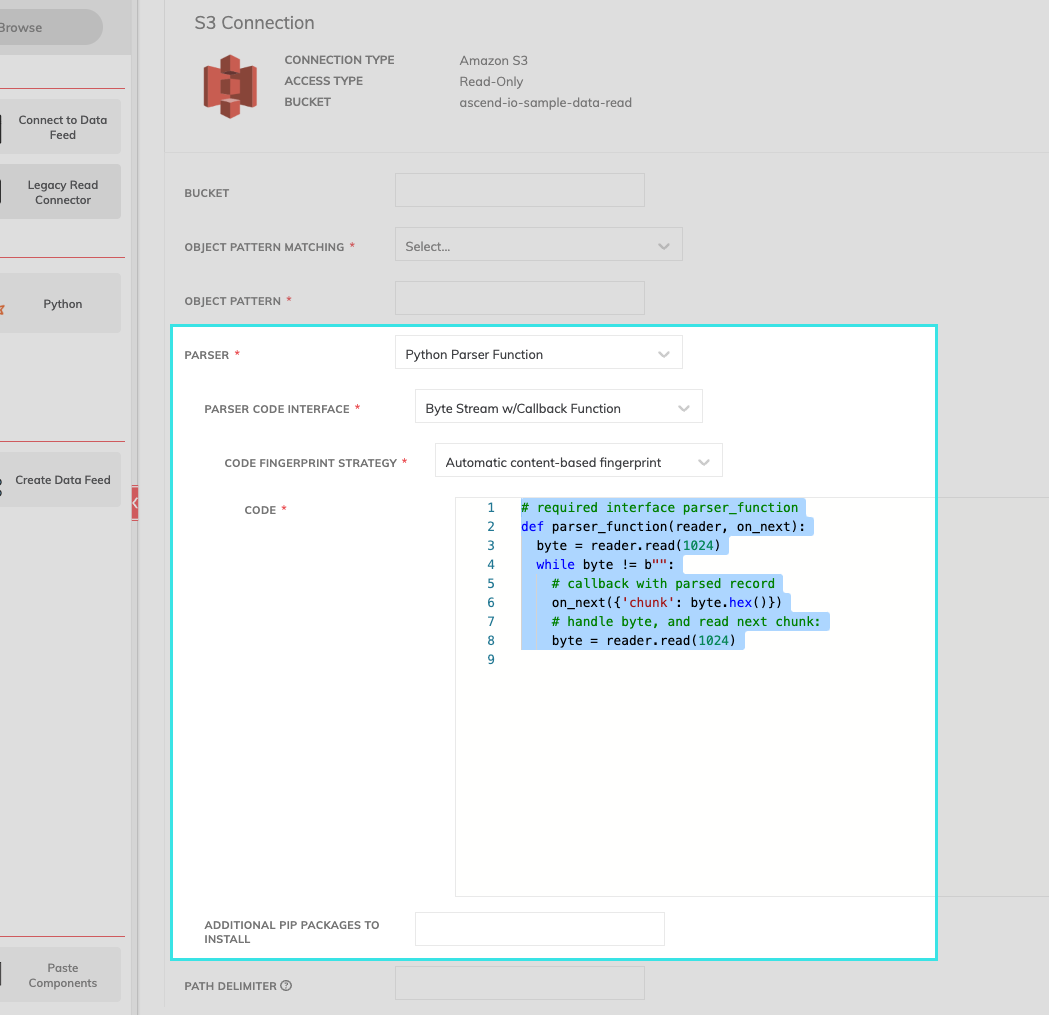

Python Parser

Python Parser Fields

| Field | Required | Description |
|---|---|---|
| PARSER CODE INTERFACE | Required | Currently available: Byte Stream with Callback Function. |
| CODE FINGERPRINT STRATEGY | Required | Currently available: Automatic content-based fingerprint. |
| CODE | Required | See below for a code sample highlighting the required parameters. |
| ADDITIONAL PIP PACKAGES TO INSTALL | Optional | List any additional pip packages to install. |

Below is a code sample included within Ascend's Python Parser Function.

    # Required interface: a function named parser_function.
    def parser_function(reader, on_next):
        # Read the first chunk of up to 1024 bytes.
        byte = reader.read(1024)
        while byte != b"":
            # Callback with the parsed record;
            # in this case, 1024 bytes per 'chunk', encoded in hexadecimal.
            on_next({'chunk': byte.hex()})
            # Read the next chunk.
            byte = reader.read(1024)
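
As a variation on the sample above, the sketch below uses the same `parser_function(reader, on_next)` interface to emit one record per line of text rather than one per fixed-size chunk. It assumes, based on the sample, that `reader.read(n)` returns bytes and returns `b""` at end of input, and that `on_next` accepts a dict. The record keys `line_no` and `text` are hypothetical, and driving the function locally with `io.BytesIO` and a list is an assumption about how to test it outside Ascend.

```python
import io


def parser_function(reader, on_next):
    buffer = b""
    line_no = 0
    while True:
        chunk = reader.read(1024)
        if chunk == b"":
            break
        buffer += chunk
        # Everything before the last newline is complete; the remainder
        # stays in the buffer until more bytes arrive.
        *complete, buffer = buffer.split(b"\n")
        for raw in complete:
            line_no += 1
            on_next({"line_no": line_no, "text": raw.decode("utf-8")})
    # Emit a final line that has no trailing newline.
    if buffer:
        line_no += 1
        on_next({"line_no": line_no, "text": buffer.decode("utf-8")})


# Local check: an in-memory byte stream stands in for the reader, and
# list.append stands in for the callback.
records = []
parser_function(io.BytesIO(b"alpha\nbeta\ngamma"), records.append)
print(records)
```

Decoding only complete lines avoids splitting a multi-byte UTF-8 character across two chunks.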