Quickstart Guides

Go from signing up for Ascend to running your first automated data pipeline in your new Ascend account.

Introduction

In the following quickstart guides, you'll learn how to build data pipelines in Ascend and create a Data Mesh by ingesting and transforming publicly available data so that it can be analyzed. Along the way, you'll learn how to:

- Create three new Dataflows.

- Load sample data into each Dataflow.

- Transform the sample data.

- Create Data Feeds.

- Create a Data Mesh with clean data ready for analysis.

Prerequisites

- A Snowflake, Databricks, or BigQuery account.

- An Ascend account.

Create an Ascend Account

This tutorial requires an Ascend environment and Data Admin permissions or higher. If you do not have these permissions, contact your Ascend administrator. If you're new to Ascend, start a free trial.

Get Started

The quickstart guides work together to create a final Data Mesh product ready for analysis. The first two guides can be completed in any order, but you'll need to create both the Taxi Domain and the Weather Domain before creating a Data Mesh.

You'll use publicly available NYC weather and taxi cab data to explore a few insights with SQL and PySpark transforms.

The Taxi Domain is the first data product. It pulls from publicly available NYC taxi cab data. Within this quickstart guide, you'll learn how to create connections, Read Connectors, SQL Transforms, and Data Feeds.

The Weather Domain is the second data product. It pulls from publicly available NYC weather data. Within this quickstart guide, you'll repeat the steps from the Taxi Domain guide to create connections, Read Connectors, SQL Transforms, and Data Feeds specific to the weather data.

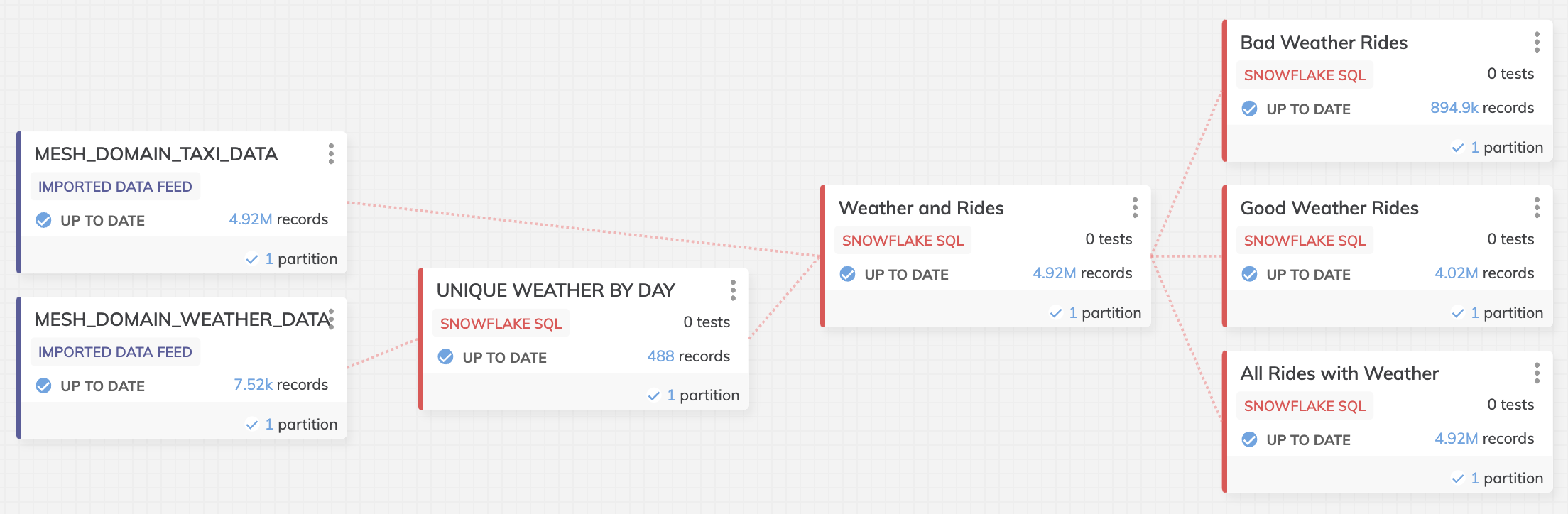

Finally, you'll create a Data mesh. To ingest data, you'll subscribe to each of the Data Feeds in both the Taxi Domain and the Weather Domain. The final transforms will contain analysis-ready data and look like the dataflow below:
