AWS Redshift Write Connector (Legacy)

Creating an AWS Redshift Write Connector

Prerequisites:

- Access credentials

- Data location on Amazon Redshift

- Data location on Amazon S3

- Partition column from upstream, if applicable

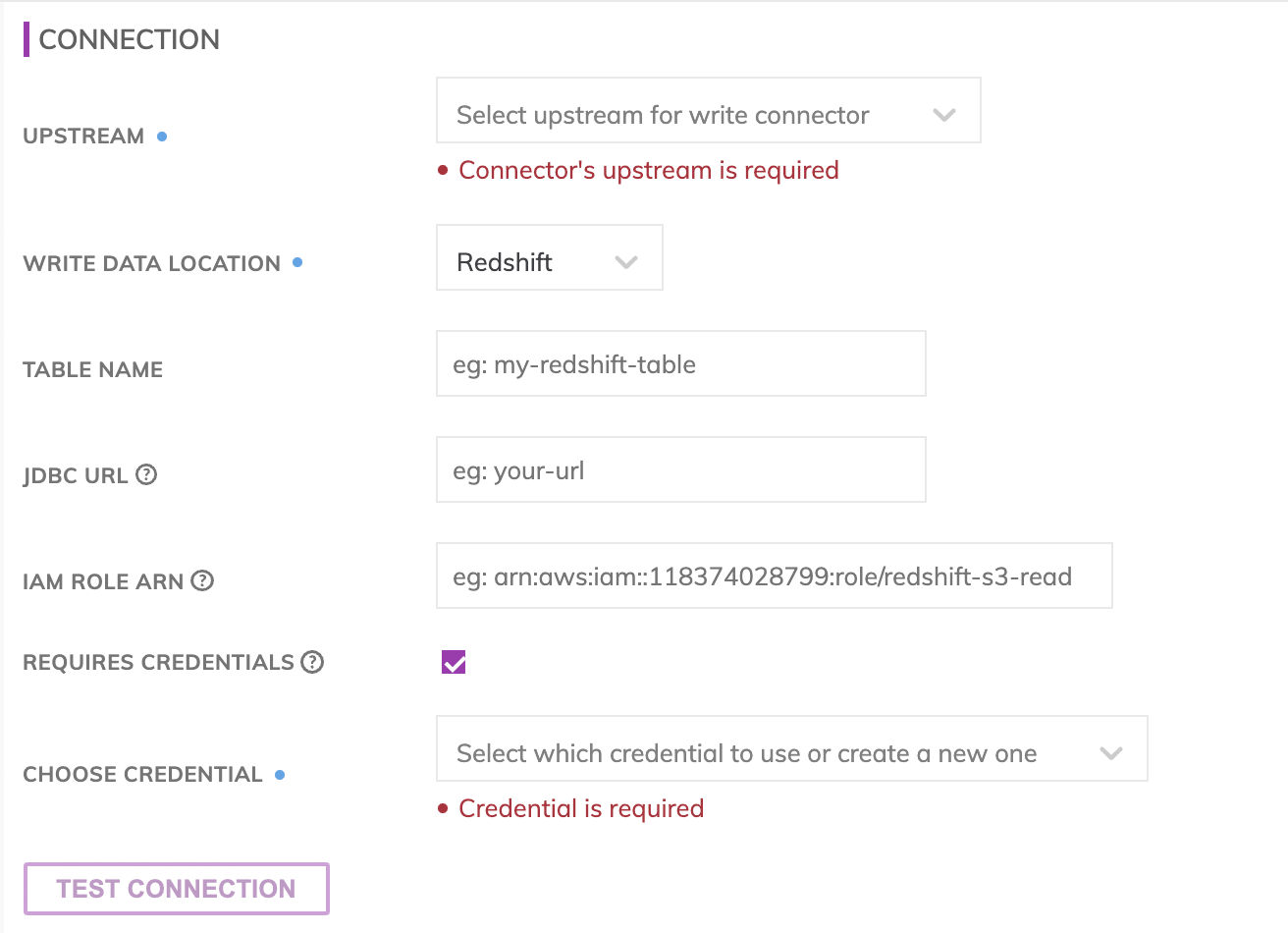

Specify the Upstream and Redshift dataset information

SuggestionFor optimal performance ensure the upstream transform is partitioned.

Redshift connection details

Specify the Redshift connection details. These includes how to connect to AWS Redshift cluster as well as where data resides within the Redshift cluster.

- Table Name: The Redshift table in which data will get pushed (e.g. my_data_warehouse_schema.my_awesome_table.) Please note: The table will be created if it does not already exist and will be located under the PUBLIC schema if no schema is specified.

- JDBC URL: The JDBC connection string to the Redshift cluster (e.g. jdbc:redshift://my-data-warehouse-redshift.cdmixszaipsh.us-east-1.redshift.amazonaws.com:5439/dev.) Include the database name at the end of the connection string (e.g. dev in this example.)

- Username/Password: The username and password of the Redshift user. Refer to AWS documentation on how to create a user in Redshift.

- IAM Role ARN: Role ARN for an IAM role that has both read and write access to the staging S3 location specified in the S3 data location section. This IAM role will need to be associated to the Redshift cluster. Please refer to AWS documentation on how to associate an IAM role with a Redshift cluster.

All Redshift connections are SSL encrypted.

Redshift ConnectivityFor Redshift clusters with public IPs, Ascend requires IP whitelisting in order to make a connection to the Redshift cluster. Please contact Ascend support at [email protected] for Ascend's static egress IP.

For Redshift clusters with private IPs, Ascend can connect to the Redshift cluster via a bastion server. Please contact Ascend support at [email protected] to get this set up.

Testing Redshift permissions

Use Test Connection to check whether all Redshift permissions are correctly configured.

Please note that the s3 access for the IAM Role mentioned above will need to be tested separately in AWS as that's role for the Redshift cluster to associate with and Ascend will not be able to assume that role.

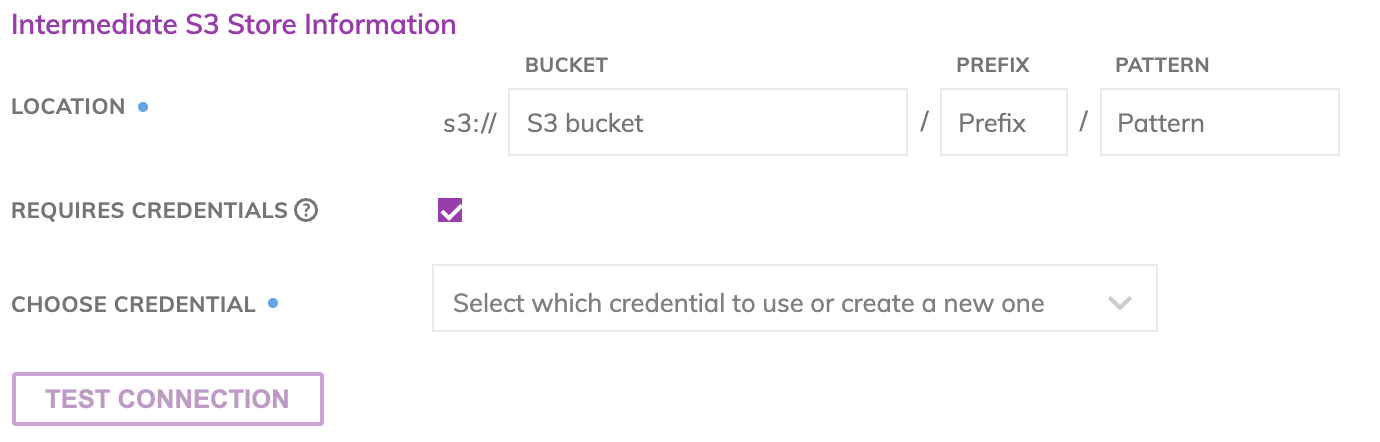

S3 data location

AWS S3 is used as a staging area prior to pushing data into Redshift. This requires configuring an S3 write connector. Specify under the Intermediate S3 Store Information section the S3 path where the data files will be created.

- Bucket: The S3 bucket name, e.g. my_data_bucket. Do not put in any folder information in this box.

- Object Prefix: The S3 folders hierarchical prefix, e.g. good_data/my_awesome_table. Do not include any leading forward slashes.

- Partition Folder Pattern (Optional): The folder pattern that will be generated based on the values from the upstream partition column, e.g. you can use {{at_hour_ts(yyyy-MM-dd/HH)}} where column at_hour_ts is a partition column from the upstream transform.

WarningAny data that is not being propagated by the upstream transform will automatically be deleted under the object prefix.

For example, if the write connector produces three files A, B and C under the example object-prefix good_data/my_awesome_table above and there was an existing file called output.txt at the same location Ascend will delete output.txt since Ascend did not generate it.

IAM access

Enter the Access Key and Secret Key for the IAM User which has both read and write access to the path specified.

Testing Connection

Use Test Connection to check whether all S3 permissions are correctly configured.

Schema Mismatch

You can select from 3 update strategies for scenarios where the schema of the upstream transform changes.

- Stop and display error: Will stop propagating new data to the Redshift table and raise an error in the UI.

- Recreate table: Delete the old Redshift table and create a new table that reflects the new schema. Please note: Any manual changes to the old table outside of the Ascend environment, such as table specific permissions, will be lost as the newly created table won't mirror the changes from the old table.

- Alter table: Send an alter table command to Redshift in an attempt to update the schema without deleting the original Redshift table such that any changes to the table outside of the Ascend (e.g. user permission, views, etc.) can be preserved. If Alter columns on type mismatch is checked, the alter table command will attempt to change the data type of the column as well. If Drop unknown columns is checked, then any columns added to the table manually outside of the Ascend environment will be removed as a result of the update. The default is both options checked.

ImportantWhen Alter table option is selected and the schema of the upstream transform has changed, all rows in the table will be deleted and new rows will be generated.

AtomicityAscend updates Redshift tables atomically. This means if an incremental update fails, the applications consuming the Redshift data will see the last completed and successful update. No leftover data from a failed update will be exposed to the Redshift consumers.

Updated 8 months ago