Write Connector

Prerequisites

- Access credentials

- Data location on AWS S3

- Partition column from upstream, if applicable

Create New Write Connector

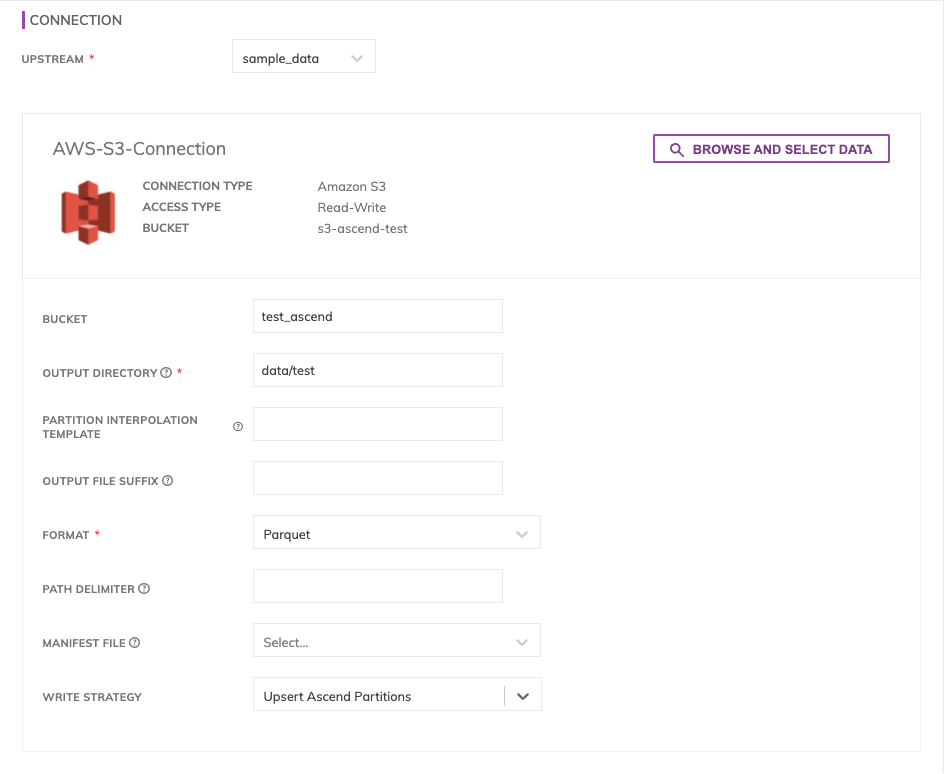

Figure 1

Connection Options

- Upstream (required): The upstream component whose data will be written.

- Browse and Select Data: Select the location within an S3 bucket to write data to. This automatically fills in the Bucket and Output Directory fields.

- Bucket (required): The S3 bucket that output data will be written to.

- Output Directory (required): The directory within the bucket to write data to. If the directory does not exist, it will be created.

- Partition Interpolation Template: Includes a value from the partition profile in the output directory name. For example, to create Hive-style partitioning on a dataset partitioned daily on the timestamp column `event_ts`, specify the pattern as `dt={{event_ts(yyyy-MM-dd)}}/`.

- Output File Suffix: A suffix to attach to each file name. By default, Ascend uses the extension of the file format, but you may optionally choose a different suffix.

- Format (required): Pick the format for the output files:

  - Avro

  - CSV

  - JSON

  - ORC

  - Parquet

  - Text

- Path Delimiter: The delimiter used to join path segments. Defaults to `/`.

- Manifest File: A manifest file that is updated with the list of output files every time those files are updated.

- Write Strategy: Pick the strategy for writing files to storage:

  - Default (Mirror to Blob Store): Keeps the blob store aligned with Ascend by inserting, updating, and deleting partitions on the blob store.

  - Ascend Upsert Partitions: Appends new partitions from Ascend and updates existing ones, without deleting partitions from the blob store that are no longer in Ascend.

  - Custom Function: Lets you implement your own write logic, which Ascend then executes.
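
To illustrate how the Partition Interpolation Template, Output Directory, Output File Suffix, and Path Delimiter options might combine into an object path, here is a minimal Python sketch. It is not Ascend's implementation: it assumes placeholders take the form `{{column(format)}}` with Java-style date tokens (as in `yyyy-MM-dd`), supports only a few common tokens, and uses a hypothetical file name `part-00000`. The functions `java_to_strftime`, `render_template`, and `build_object_key` are illustrative names only.

```python
import re
from datetime import datetime

# Assumed mapping from Java-style date tokens to Python strftime directives.
# Only a handful of common tokens are covered in this sketch.
_TOKEN_MAP = {"yyyy": "%Y", "MM": "%m", "dd": "%d", "HH": "%H", "mm": "%M", "ss": "%S"}
# Longest tokens first so "yyyy" is matched before any shorter token.
_TOKEN_RE = re.compile("|".join(sorted(_TOKEN_MAP, key=len, reverse=True)))

# Assumed placeholder syntax: {{column(format)}}
_PLACEHOLDER_RE = re.compile(r"\{\{(\w+)\((.*?)\)\}\}")


def java_to_strftime(pattern: str) -> str:
    """Translate a Java-style date pattern such as 'yyyy-MM-dd' to strftime."""
    return _TOKEN_RE.sub(lambda m: _TOKEN_MAP[m.group(0)], pattern)


def render_template(template: str, partition_values: dict) -> str:
    """Replace each {{column(format)}} with the formatted partition value."""
    def substitute(match: re.Match) -> str:
        column, fmt = match.group(1), match.group(2)
        return partition_values[column].strftime(java_to_strftime(fmt))
    return _PLACEHOLDER_RE.sub(substitute, template)


def build_object_key(output_dir: str, template: str, partition_values: dict,
                     file_name: str, suffix: str, delimiter: str = "/") -> str:
    """Join output directory, rendered partition path, and file name + suffix."""
    segments = [
        output_dir.strip(delimiter),
        render_template(template, partition_values).strip(delimiter),
        file_name + suffix,
    ]
    # Skip empty segments (e.g. when no template is configured).
    return delimiter.join(s for s in segments if s)


if __name__ == "__main__":
    ts = datetime(2023, 5, 17, 13, 45)
    print(render_template("dt={{event_ts(yyyy-MM-dd)}}/", {"event_ts": ts}))
    # dt=2023-05-17/
    print(build_object_key("exports/events", "dt={{event_ts(yyyy-MM-dd)}}/",
                           {"event_ts": ts}, "part-00000", ".parquet"))
    # exports/events/dt=2023-05-17/part-00000.parquet
```

In this sketch, every partition whose `event_ts` falls on the same day renders to the same `dt=` directory, which is what produces Hive-style daily partition folders.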
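
The difference between the Default (Mirror to Blob Store) and Ascend Upsert Partitions strategies can be modeled as set operations on partitions. The following Python sketch is a simplified model, not Ascend's implementation: it assumes each partition is identified by a key (for example `dt=2023-05-17`) and compared through a content fingerprint. The names `plan_writes` and `apply_plan` are hypothetical.

```python
from typing import Dict, List, Tuple

# Assumed model: partition key -> content fingerprint (illustrative only).
Partitions = Dict[str, str]


def plan_writes(ascend: Partitions, blob_store: Partitions,
                mirror: bool) -> Tuple[List[str], List[str], List[str]]:
    """Return (inserts, updates, deletes) needed to sync the blob store."""
    inserts = sorted(k for k in ascend if k not in blob_store)
    updates = sorted(k for k in ascend
                     if k in blob_store and ascend[k] != blob_store[k])
    # Only the mirror strategy removes partitions that are no longer in Ascend.
    deletes = sorted(k for k in blob_store if k not in ascend) if mirror else []
    return inserts, updates, deletes


def apply_plan(ascend: Partitions, blob_store: Partitions,
               mirror: bool) -> Partitions:
    """Return the blob store contents after applying the planned writes."""
    inserts, updates, deletes = plan_writes(ascend, blob_store, mirror)
    result = dict(blob_store)
    for key in inserts + updates:
        result[key] = ascend[key]
    for key in deletes:
        del result[key]
    return result


if __name__ == "__main__":
    ascend_partitions = {"dt=2023-05-16": "v2", "dt=2023-05-17": "v1"}
    blob_partitions = {"dt=2023-05-15": "v1", "dt=2023-05-16": "v1"}
    print(apply_plan(ascend_partitions, blob_partitions, mirror=True))
    # {'dt=2023-05-16': 'v2', 'dt=2023-05-17': 'v1'}
    print(apply_plan(ascend_partitions, blob_partitions, mirror=False))
    # {'dt=2023-05-15': 'v1', 'dt=2023-05-16': 'v2', 'dt=2023-05-17': 'v1'}
```

With mirroring, the blob store ends up identical to the partitions in Ascend; with upserts, the stale `dt=2023-05-15` partition is left in place.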
