Google Sheets Read Connector

Create New Read Connector

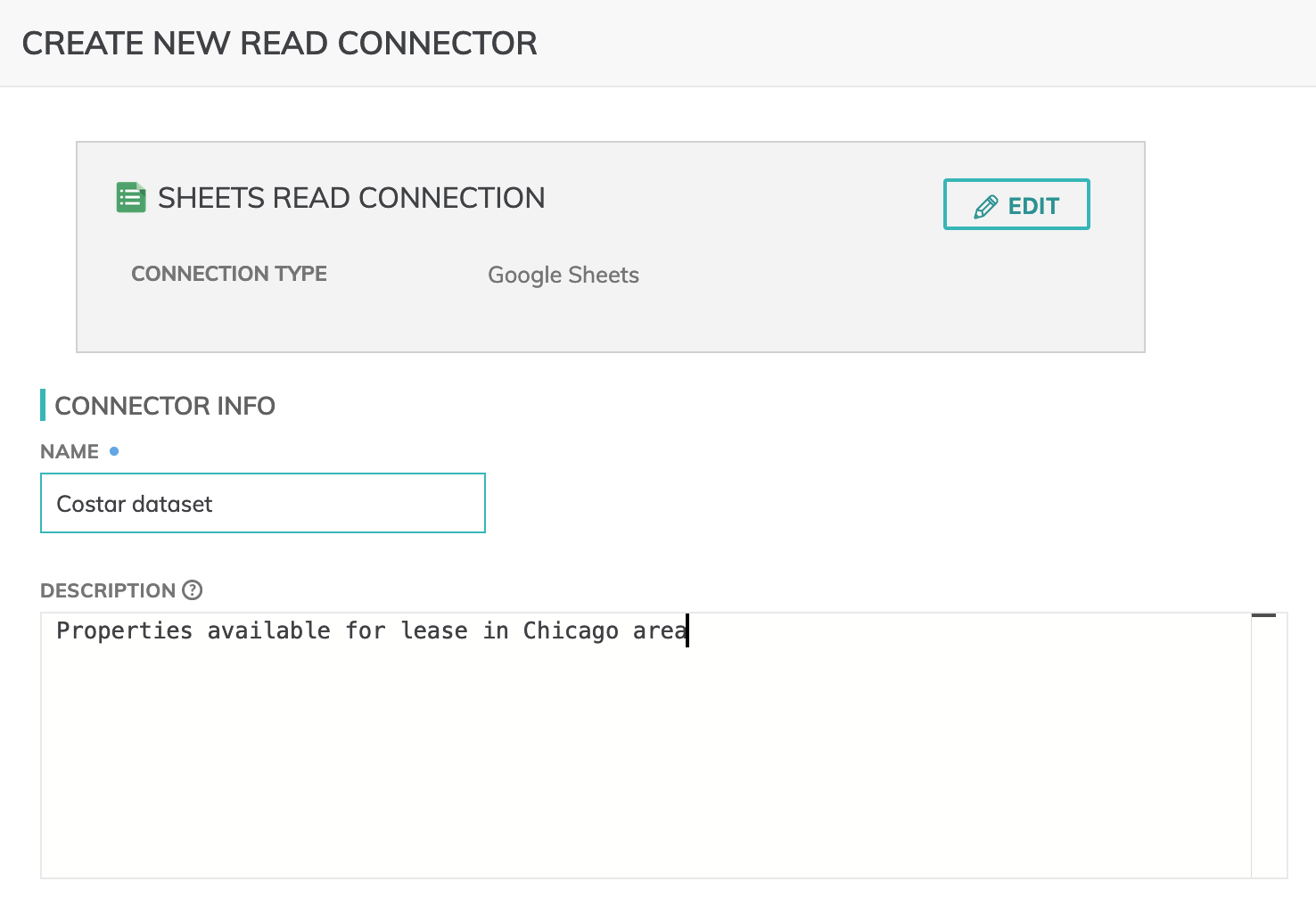

Figure 1

The first item at the top (Figure 1 above) is a highlighted box showing the Google Sheets connection, with an EDIT button that you can use to modify the connection.

Connector Info

- Name (required): The name used to identify this connector.

- Description (optional): Description of what data this connector will read.

Connector Configuration

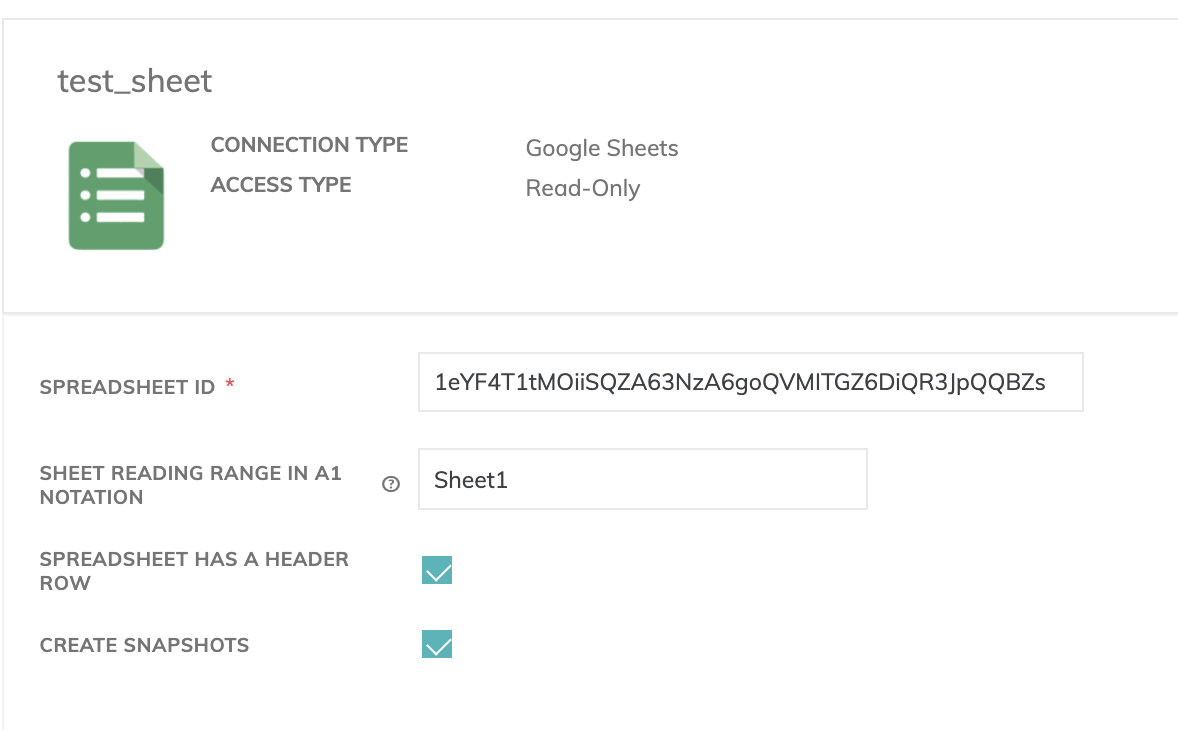

Figure 2

- Browse Connection (required): Click this button to explore resources and locate the assets to ingest. This opens the Google Sheets browser in a modal dialog (Figure 7 below), where you can navigate to the spreadsheet you want to import. Select the spreadsheet to ingest and press Confirm.

Note: Each spreadsheet must be shared with the email address associated with the GCP service account used in the connection.

- Sheet Reading Range in A1 Notation *: Select the columns and rows to be read. A tutorial on A1 notation can be found here: https://developers.google.com/sheets/api/guides/concepts

- Spreadsheet has a Header row: Use the first row of the spreadsheet as the column names of the DataFrame.

- Create Snapshot *: Add a column named `snapshot_ts` to the DataFrame schema.

At refresh time, the content of the spreadsheet is copied into a new partition and the refresh time is stored in `snapshot_ts`. This pattern lets you keep the spreadsheet's history over time, with one snapshot per refresh.

Note: `snapshot_ts` is a required column. Although a user can remove it from the schema manually, it should remain part of the schema.
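To make the header-row and snapshot options concrete, here is a minimal Python sketch of the transformation they describe. It assumes the sheet arrives as a list of rows, the shape the Google Sheets API `spreadsheets.values.get` call returns, where trailing empty cells can be omitted. The names `rows_to_records` and `refresh`, the `column_N` fallback names, and the plain dict standing in for partitions are all hypothetical; this is an illustration, not Ascend's implementation.

```python
from datetime import datetime, timezone
from typing import Any, Dict, List, Optional

Record = Dict[str, Any]


def rows_to_records(values: List[List[Any]], has_header: bool, snapshot_ts: str) -> List[Record]:
    """Turn a 2D list of cell values into records tagged with snapshot_ts."""
    if not values:
        return []
    if has_header:
        # "Spreadsheet has a Header row": the first row supplies column names.
        columns = [str(cell) for cell in values[0]]
        data = values[1:]
    else:
        # Hypothetical fallback names when there is no header row.
        width = max(len(row) for row in values)
        columns = [f"column_{i}" for i in range(width)]
        data = values
    records = []
    for row in data:
        # Pad short rows, since trailing empty cells may be omitted.
        padded = list(row) + [None] * (len(columns) - len(row))
        record = dict(zip(columns, padded))
        record["snapshot_ts"] = snapshot_ts  # the "Create Snapshot" column
        records.append(record)
    return records


def refresh(store: Dict[str, List[Record]], values: List[List[Any]],
            has_header: bool, now: Optional[datetime] = None) -> str:
    """Copy the current sheet into a new partition keyed by the refresh time."""
    if now is None:
        now = datetime.now(timezone.utc)
    snapshot_ts = now.isoformat()
    store[snapshot_ts] = rows_to_records(values, has_header, snapshot_ts)
    return snapshot_ts


# Usage: two refreshes of the same sheet produce two partitions.
partitions: Dict[str, List[Record]] = {}
sheet = [["name", "qty"], ["apples", 3], ["pears"]]
refresh(partitions, sheet, has_header=True)
refresh(partitions, sheet, has_header=True, now=datetime(2024, 1, 1, tzinfo=timezone.utc))
```

Keying each partition by its refresh time is what makes the history queryable: filtering on `snapshot_ts` selects the sheet exactly as it looked at one refresh.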

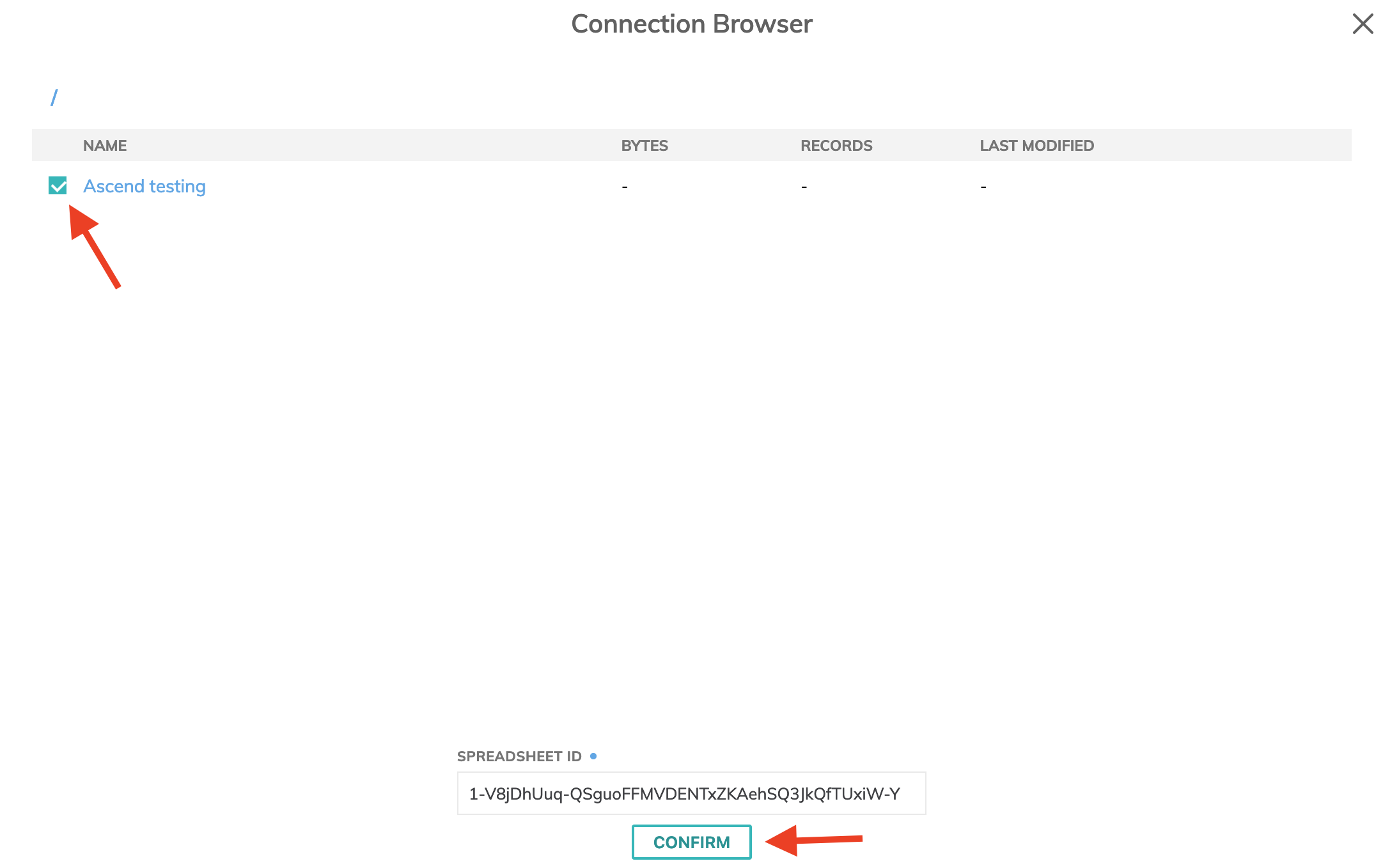

Figure 3
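For the Sheet Reading Range in A1 Notation setting, a range is an optional sheet name followed by `!` and a start and end cell, for example `Sheet1!A1:C10`, `'My Sheet'!B2:D` or `A:C`. The sketch below, with hypothetical function names, shows one way to parse such ranges into 1-based column and row indices. It assumes the range contains a cell reference; a bare sheet name such as `Sheet1`, which the Sheets API also accepts, is deliberately out of scope and rejected.

```python
import re
from typing import NamedTuple, Optional, Tuple

# One to three column letters followed by an optional row number.
_CELL = re.compile(r"([A-Za-z]{0,3})(\d*)")


class Cell(NamedTuple):
    col: Optional[int]  # 1-based column index; None when omitted, as in "2:5"
    row: Optional[int]  # 1-based row number; None when omitted, as in "A:C"


def column_to_index(letters: str) -> int:
    """Convert column letters to a 1-based index: A -> 1, Z -> 26, AA -> 27."""
    index = 0
    for ch in letters.upper():
        index = index * 26 + (ord(ch) - ord("A") + 1)
    return index


def parse_cell(ref: str) -> Cell:
    """Parse one cell reference such as "B2", "D" or "5"."""
    match = _CELL.fullmatch(ref)
    if not ref or match is None:
        raise ValueError(f"not an A1 cell reference: {ref!r}")
    letters, digits = match.groups()
    row = int(digits) if digits else None
    if row == 0:
        raise ValueError("A1 row numbers start at 1")
    return Cell(column_to_index(letters) if letters else None, row)


def parse_a1(range_str: str) -> Tuple[Optional[str], Cell, Cell]:
    """Split 'Sheet!Start:End' into (sheet name or None, start cell, end cell)."""
    sheet: Optional[str] = None
    ref = range_str
    if "!" in range_str:
        sheet, ref = range_str.rsplit("!", 1)
        # Quoted sheet names escape a single quote by doubling it.
        if len(sheet) >= 2 and sheet[0] == sheet[-1] == "'":
            sheet = sheet[1:-1].replace("''", "'")
    start, sep, end = ref.partition(":")
    first = parse_cell(start)
    last = parse_cell(end) if sep else first  # a single cell is a 1x1 range
    return sheet, first, last
```

A `None` column or row marks an open-ended side of the range, which is how `A:C` reads whole columns and `B2:D` reads from row 2 to the last row with data.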

Generate Schema

Once you click the GENERATE SCHEMA button, the parser creates a schema and populates a data preview, as in the image below.

- Add schema column: Add a custom column to the generated schema
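This page does not document how the parser infers column types. As an illustration only, the sketch below assumes every cell can be read as text and picks the narrowest type that fits all non-empty values in a column. The type names (`boolean`, `integer`, `double`, `string`) and the `add_schema_column` helper are hypothetical stand-ins for the GENERATE SCHEMA and Add schema column actions.

```python
from typing import Any, Dict, Iterable, List, Mapping


def _is_bool(text: str) -> bool:
    return text.strip().upper() in ("TRUE", "FALSE")


def _is_int(text: str) -> bool:
    try:
        int(text)
        return True
    except ValueError:
        return False


def _is_float(text: str) -> bool:
    try:
        float(text)
        return True
    except ValueError:
        return False


def infer_type(values: Iterable[Any]) -> str:
    """Pick the narrowest hypothetical type that fits every non-empty value."""
    texts = [str(v) for v in values if v is not None and str(v) != ""]
    if not texts:
        return "string"  # nothing to go on: fall back to text
    for type_name, test in (("boolean", _is_bool), ("integer", _is_int), ("double", _is_float)):
        if all(test(t) for t in texts):
            return type_name
    return "string"


def infer_schema(rows: List[Mapping[str, Any]], columns: List[str]) -> Dict[str, str]:
    """Infer one type per column from the preview rows."""
    return {col: infer_type(row.get(col) for row in rows) for col in columns}


def add_schema_column(schema: Dict[str, str], name: str, type_name: str) -> None:
    """Hypothetical 'Add schema column': append a custom column to the schema."""
    if name in schema:
        raise ValueError(f"column {name!r} already exists")
    schema[name] = type_name
```

Checking the narrowest type first means a column of `1` and `2.5` ends up as `double` rather than `integer`, and any single non-numeric value demotes the column to `string`.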

Component Pausing

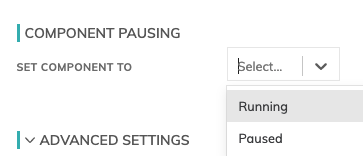

Figure 4

Update the status of the read connector by marking it either Running, to make it active, or Paused, to stop it from running.

Refresh Schedule

The refresh schedule specifies how often Ascend checks the data location to see if there's new data. Ascend will automatically kick off the corresponding big data jobs once new or updated data is discovered.

Figure 5
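This page does not say how Ascend detects new or updated data. One common technique, shown here as a hedged sketch rather than Ascend's mechanism, is to fingerprint the fetched content on each scheduled check and start downstream work only when the fingerprint changes. `fetch` and `on_new_data` are hypothetical callbacks; a scheduler would call `check` once per refresh interval.

```python
import hashlib
import json
from typing import Any, Callable, List, Optional


def fingerprint(values: List[List[Any]]) -> str:
    """Stable SHA-256 digest of the sheet content."""
    payload = json.dumps(values, sort_keys=True, default=str).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class RefreshChecker:
    """Remembers the last fingerprint and reports whether the content changed."""

    def __init__(self) -> None:
        self.last: Optional[str] = None

    def check(self, fetch: Callable[[], List[List[Any]]],
              on_new_data: Callable[[List[List[Any]]], None]) -> bool:
        values = fetch()
        current = fingerprint(values)
        if current == self.last:
            return False  # nothing new: skip downstream work
        self.last = current
        on_new_data(values)  # hypothetical hook that would start the jobs
        return True
```

Comparing a digest instead of the full previous copy keeps the checker's state small regardless of sheet size.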

Processing Priority (optional)

When resources are constrained, Processing Priority is used to determine which components to schedule first.

Higher priority numbers are scheduled before lower ones. Increasing the priority on a component also causes all its upstream components to be prioritized higher. Negative priorities can be used to postpone work until excess capacity becomes available.

Figure 6
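The priority rules above can be sketched in Python as follows. The sketch assumes components form a graph given as a mapping from each component to its direct upstream components, that raising a component's priority lifts each upstream component to at least that value (a max rule, which is an assumption), and that unset priorities default to 0. Scheduling then fills slots with the highest effective priorities first, so negative-priority work only gets a slot when nothing of higher priority is waiting. All names are hypothetical.

```python
from typing import Dict, List


def effective_priorities(priority: Dict[str, int], upstream: Dict[str, List[str]]) -> Dict[str, int]:
    """Raise every upstream component to at least the priority of its downstream."""
    effective = dict(priority)
    for component, prio in priority.items():
        stack = list(upstream.get(component, []))
        seen = set()
        while stack:
            parent = stack.pop()
            if parent in seen:
                continue
            seen.add(parent)
            # Assumed max rule; unset priorities default to 0.
            effective[parent] = max(effective.get(parent, 0), prio)
            stack.extend(upstream.get(parent, []))
    return effective


def pick_next(ready: List[str], effective: Dict[str, int], capacity: int) -> List[str]:
    """Fill the available slots, highest effective priority first (ties by name)."""
    ordered = sorted(ready, key=lambda c: (-effective.get(c, 0), c))
    return ordered[:capacity]


# Usage: "report" has priority 10, so its upstream chain is lifted to 10,
# while "backfill" at -5 waits until spare capacity is available.
priority = {"read": 0, "transform": 0, "report": 10, "backfill": -5}
upstream = {"transform": ["read"], "report": ["transform"], "backfill": ["read"]}
eff = effective_priorities(priority, upstream)
print(pick_next(["backfill", "report", "read"], eff, capacity=2))
```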
